diff --git a/README.md b/README.md

index 357ed35..eaceeb9 100644

--- a/README.md

+++ b/README.md

@@ -18,3 +18,4 @@ $ git clone https://git.platypush.tech/platypush/blog.git

$ cd blog

$ madblog

```

+

diff --git a/config.yaml b/config.yaml

index b7a8475..ea9967c 100644

--- a/config.yaml

+++ b/config.yaml

@@ -2,7 +2,6 @@ title: Platypush

description: The Platypush blog

link: https://blog.platypush.tech

home_link: https://platypush.tech

-short_feed: True

categories:

- IoT

- automation

diff --git a/img/music-automation.png b/img/music-automation.png

deleted file mode 100644

index 36be419..0000000

Binary files a/img/music-automation.png and /dev/null differ

diff --git a/markdown/Automate-your-music-collection.md b/markdown/Automate-your-music-collection.md

deleted file mode 100644

index 3a54e80..0000000

--- a/markdown/Automate-your-music-collection.md

+++ /dev/null

@@ -1,1230 +0,0 @@

-[//]: # (title: Automate your music collection)

-[//]: # (description: Use Platypush to manage your music activity, discovery playlists and be on top of new releases.)

-[//]: # (image: /img/music-automation.png)

-[//]: # (author: Fabio Manganiello <fabio@platypush.tech>)

-[//]: # (published: 2022-09-19)

-[//]: # (latex: 1)

-

-I have been an enthusiastic user of mpd and mopidy for nearly two decades. I

-have already [written an

-article](https://blog.platypush.tech/article/Build-your-open-source-multi-room-and-multi-provider-sound-server-with-Platypush-Mopidy-and-Snapcast)

-on how to leverage mopidy (with its tons of integrations, including Spotify,

-Tidal, YouTube, Bandcamp, Plex, TuneIn, SoundCloud etc.), Snapcast (with its

-multi-room listening experience out of the box) and Platypush (with its

-automation hooks that allow you to easily create if-this-then-that rules for

-your music events) to take your listening experience to the next level, while

-using open protocols and easily extensible open-source software.

-

-There is a feature that I haven't yet covered in my previous articles, and

-that's the automation of your music collection.

-

-Spotify, Tidal and other music streaming services offer you features such as a

-_Discovery Weekly_ or _Release Radar_ playlists, respectively filled with

-tracks that you may like, or newly released tracks that you may be interested

-in.

-

-The problem is that these services come with heavy trade-offs:

-

-1. Their algorithms are closed. You don't know how Spotify figures out which

- songs should be picked in your smart playlists. In the past months, Spotify

- would often suggest me tracks from the same artists that I had already

- listened to or skipped in the past, and there's no transparent way to tell

- the algorithm "hey, actually I'd like you to suggest me more this kind of

- music - or maybe calculate suggestions only based on the music I've listened

- to in this time range, or maybe weigh this genre more".

-

-2. Those features are tightly coupled with the service you use. If you cancel

- your Spotify subscription, you lose those smart features as well.

- Companies like Spotify use such features as a lock-in mechanism -

- you can check out any time you like, but if you do then nobody else will

- provide you with their clever suggestions.

-

-After migrating from Spotify to Tidal in the past couple of months (TL;DR:

-Spotify f*cked up their developer experience multiple times over the past

-decade, and their killing of libspotify without providing any alternatives was

-the last nail in the coffin for me) I felt like missing their smart mixes,

-discovery and new releases playlists - and, on the other hand, Tidal took a

-while to learn my listening habits, and even when it did it often generated

-smart playlists that were an inch below Spotify's. I asked myself why on earth

-my music discovery experience should be so tightly coupled to one single cloud

-service. And I decided that the time had come for me to automatically generate

-my service-agnostic music suggestions: it's not rocket science anymore, there's

-plenty of services that you can piggyback on to get artist or tracks similar to

-some music given as input, and there's just no excuses to feel locked in by

-Spotify, Google, Tidal or some other cloud music provider.

-

-In this article we'll cover how to:

-

-1. Use Platypush to automatically keep track of the music you listen to from

- any of your devices;

-2. Calculate the suggested tracks that may be similar to the music you've

- recently listen to by using the Last.FM API;

-3. Generate a _Discover Weekly_ playlist similar to Spotify's without relying

- on Spotify;

-4. Get the newly released albums and single by subscribing to an RSS feed;

-5. Generate a weekly playlist with the new releases by filtering those from

- artists that you've listened to at least once.

-

-## Ingredients

-

-We will use Platypush to handle the following features:

-

-1. Store our listening history to a local database, or synchronize it with a

- scrobbling service like [last.fm](https://last.fm).

-2. Periodically inspect our newly listened tracks, and use the last.fm API to

- retrieve similar tracks.

-3. Generate a discover weekly playlist based on a simple score that ranks

- suggestions by match score against the tracks listened on a certain period

- of time, and increases the weight of suggestions that occur multiple times.

-4. Monitor new releases from the newalbumreleases.net RSS feed, and create a

- weekly _Release Radar_ playlist containing the items from artists that we

- have listened to at least once.

-

-This tutorial will require:

-

-1. A database to store your listening history and suggestions. The database

- initialization script has been tested against Postgres, but it should be

- easy to adapt it to MySQL or SQLite with some minimal modifications.

-2. A machine (it can be a RaspberryPi, a home server, a VPS, an unused tablet

- etc.) to run the Platypush automation.

-3. A Spotify or Tidal account. The reported examples will generate the

- playlists on a Tidal account by using the `music.tidal` Platypush plugin,

- but it should be straightforward to adapt them to Spotify by using the

- `music.spotify` plugin, or even to YouTube by using the YouTube API, or even

- to local M3U playlists.

-

-## Setting up the software

-

-Start by installing Platypush with the

-[Tidal](https://docs.platypush.tech/platypush/plugins/music.tidal.html),

-[RSS](https://docs.platypush.tech/platypush/plugins/rss.html) and

-[Last.fm](https://docs.platypush.tech/platypush/plugins/lastfm.html)

-integrations:

-

-```

-[sudo] pip install 'platypush[tidal,rss,lastfm]'

-```

-

-If you want to use Spotify instead of Tidal then just remove `tidal` from the

-list of extra dependencies - no extra dependencies are required for the

-[Spotify

-plugin](https://docs.platypush.tech/platypush/plugins/music.spotify.html).

-

-If you are planning to listen to music through mpd/mopidy, then you may also

-want to include `mpd` in the list of extra dependencies, so Platypush can

-directly monitor your listening activity over the MPD protocol.

-

-Let's then configure a simple configuration under `~/.config/platypush/config.yaml`:

-

-```yaml

-music.tidal:

- # No configuration required

-

-# Or, if you use Spotify, create an app at https://developer.spotify.com and

-# add its credentials here

-# music.spotify:

-# client_id: client_id

-# client_secret: client_secret

-

-lastfm:

- api_key: your_api_key

- api_secret: your_api_secret

- username: your_user

- password: your_password

-

-# Subscribe to updates from newalbumreleases.net

-rss:

- subscriptions:

- - https://newalbumreleases.net/category/cat/feed/

-

-# Optional, used to send notifications about generation issues to your

-# mobile/browser. You can also use Pushbullet, an email plugin or a chatbot if

-# you prefer.

-ntfy:

- # No configuration required if you want to use the default server at

- # https://ntfy.sh

-

-# Include the mpd plugin and backend if you are listening to music over

-# mpd/mopidy

-music.mpd:

- host: localhost

- port: 6600

-

-backend.music.mopidy:

- host: localhost

- port: 6600

-```

-

-Start Platypush by running the `platypush` command. The first time it should

-prompt you with a tidal.com link required to authenticate your user. Open it in

-your browser and authorize the app - the next runs should no longer ask you to

-authenticate.

-

-Once the Platypush dependencies are in place, let's move to configure the

-database.

-

-## Database configuration

-

-I'll assume that you have a Postgres database running somewhere, but the script

-below can be easily adapted also to other DBMS's.

-

-Database initialization script:

-

-```sql

--- New listened tracks will be pushed to the tmp_music table, and normalized by

--- a trigger.

-drop table if exists tmp_music cascade;

-create table tmp_music(

- id serial not null,

- artist varchar(255) not null,

- title varchar(255) not null,

- album varchar(255),

- created_at timestamp with time zone default CURRENT_TIMESTAMP,

- primary key(id)

-);

-

--- This table will store the tracks' info

-drop table if exists music_track cascade;

-create table music_track(

- id serial not null,

- artist varchar(255) not null,

- title varchar(255) not null,

- album varchar(255),

- created_at timestamp with time zone default CURRENT_TIMESTAMP,

- primary key(id),

- unique(artist, title)

-);

-

--- Create an index on (artist, title), and ensure that the (artist, title) pair

--- is unique

-create unique index track_artist_title_idx on music_track(lower(artist), lower(title));

-create index track_artist_idx on music_track(lower(artist));

-

--- music_activity holds the listened tracks

-drop table if exists music_activity cascade;

-create table music_activity(

- id serial not null,

- track_id int not null,

- created_at timestamp with time zone default CURRENT_TIMESTAMP,

- primary key(id)

-);

-

--- music_similar keeps track of the similar tracks

-drop table if exists music_similar cascade;

-create table music_similar(

- source_track_id int not null,

- target_track_id int not null,

- match_score float not null,

- primary key(source_track_id, target_track_id),

- foreign key(source_track_id) references music_track(id),

- foreign key(target_track_id) references music_track(id)

-);

-

--- music_discovery_playlist keeps track of the generated discovery playlists

-drop table if exists music_discovery_playlist cascade;

-create table music_discovery_playlist(

- id serial not null,

- name varchar(255),

- created_at timestamp with time zone default CURRENT_TIMESTAMP,

- primary key(id)

-);

-

--- This table contains the track included in each discovery playlist

-drop table if exists music_discovery_playlist_track cascade;

-create table music_discovery_playlist_track(

- id serial not null,

- playlist_id int not null,

- track_id int not null,

- primary key(id),

- unique(playlist_id, track_id),

- foreign key(playlist_id) references music_discovery_playlist(id),

- foreign key(track_id) references music_track(id)

-);

-

--- This table contains the new releases from artist that we've listened to at

--- least once

-drop table if exists new_release cascade;

-create table new_release(

- id serial not null,

- artist varchar(255) not null,

- album varchar(255) not null,

- genre varchar(255),

- created_at timestamp with time zone default CURRENT_TIMESTAMP,

-

- primary key(id),

- constraint u_artist_title unique(artist, album)

-);

-

--- This trigger normalizes the tracks inserted into tmp_track

-create or replace function sync_music_data()

- returns trigger as

-$$

-declare

- track_id int;

-begin

- insert into music_track(artist, title, album)

- values(new.artist, new.title, new.album)

- on conflict(artist, title) do update

- set album = coalesce(excluded.album, old.album)

- returning id into track_id;

-

- insert into music_activity(track_id, created_at)

- values (track_id, new.created_at);

-

- delete from tmp_music where id = new.id;

- return new;

-end;

-$$

-language 'plpgsql';

-

-drop trigger if exists on_sync_music on tmp_music;

-create trigger on_sync_music

- after insert on tmp_music

- for each row

- execute procedure sync_music_data();

-

--- (Optional) accessory view to easily peek the listened tracks

-drop view if exists vmusic;

-create view vmusic as

-select t.id as track_id

- , t.artist

- , t.title

- , t.album

- , a.created_at

-from music_track t

-join music_activity a

-on t.id = a.track_id;

-```

-

-Run the script on your database - if everything went smooth then all the tables

-should be successfully created.

-

-## Synchronizing your music activity

-

-Now that all the dependencies are in place, it's time to configure the logic to

-store your music activity to your database.

-

-If most of your music activity happens through mpd/mopidy, then storing your

-activity to the database is as simple as creating a hook on

-[`NewPlayingTrackEvent`

-events](https://docs.platypush.tech/platypush/events/music.html)

-that inserts any new played track on `tmp_music`. Paste the following

-content to a new Platypush user script (e.g.

-`~/.config/platypush/scripts/music/sync.py`):

-

-```python

-# ~/.config/platypush/scripts/music/sync.py

-

-from logging import getLogger

-

-from platypush.context import get_plugin

-from platypush.event.hook import hook

-from platypush.message.event.music import NewPlayingTrackEvent

-

-logger = getLogger('music_sync')

-

-# SQLAlchemy connection string that points to your database

-music_db_engine = 'postgresql+pg8000://dbuser:dbpass@dbhost/dbname'

-

-

-# Hook that react to NewPlayingTrackEvent events

-@hook(NewPlayingTrackEvent)

-def on_new_track_playing(event, **_):

- track = event.track

-

- # Skip if the track has no artist/title specified

- if not (track.get('artist') and track.get('title')):

- return

-

- logger.info(

- 'Inserting track: %s - %s',

- track['artist'], track['title']

- )

-

- db = get_plugin('db')

- db.insert(

- engine=music_db_engine,

- table='tmp_music',

- records=[

- {

- 'artist': track['artist'],

- 'title': track['title'],

- 'album': track.get('album'),

- }

- for track in tracks

- ]

- )

-```

-

-Alternatively, if you also want to sync music activity that happens on

-other clients (such as the Spotify/Tidal app or web view, or over mobile

-devices), you may consider leveraging Last.fm. Last.fm (or its open alternative

-Libre.fm) is a _scrobbling_ service compatible with most of the music

-players out there. Both Spotify and Tidal support scrobbling, the [Android

-app](https://apkpure.com/last-fm/fm.last.android) can grab any music activity

-on your phone and scrobble it, and there are even [browser

-extensions](https://chrome.google.com/webstore/detail/web-scrobbler/hhinaapppaileiechjoiifaancjggfjm?hl=en)

-that allow you to keep track of any music activity from any browser tab.

-

-So an alternative approach may be to send both your mpd/mopidy music activity,

-as well as your in-browser or mobile music activity, to last.fm / libre.fm. The

-corresponding hook would be:

-

-```python

-# ~/.config/platypush/scripts/music/sync.py

-

-from logging import getLogger

-

-from platypush.context import get_plugin

-from platypush.event.hook import hook

-from platypush.message.event.music import NewPlayingTrackEvent

-

-logger = getLogger('music_sync')

-

-

-# Hook that react to NewPlayingTrackEvent events

-@hook(NewPlayingTrackEvent)

-def on_new_track_playing(event, **_):

- track = event.track

-

- # Skip if the track has no artist/title specified

- if not (track.get('artist') and track.get('title')):

- return

-

- lastfm = get_plugin('lastfm')

- logger.info(

- 'Scrobbling track: %s - %s',

- track['artist'], track['title']

- )

-

- lastfm.scrobble(

- artist=track['artist'],

- title=track['title'],

- album=track.get('album'),

- )

-```

-

-If you go for the scrobbling way, then you may want to periodically synchronize

-your scrobble history to your local database - for example, through a cron that

-runs every 30 seconds:

-

-```python

-# ~/.config/platypush/scripts/music/scrobble2db.py

-

-import logging

-

-from datetime import datetime

-

-from platypush.context import get_plugin, Variable

-from platypush.cron import cron

-

-logger = logging.getLogger('music_sync')

-music_db_engine = 'postgresql+pg8000://dbuser:dbpass@dbhost/dbname'

-

-# Use this stored variable to keep track of the time of the latest

-# synchronized scrobble

-last_timestamp_var = Variable('LAST_SCROBBLED_TIMESTAMP')

-

-

-# This cron executes every 30 seconds

-@cron('* * * * * */30')

-def sync_scrobbled_tracks(**_):

- db = get_plugin('db')

- lastfm = get_plugin('lastfm')

-

- # Use the last.fm plugin to retrieve all the new tracks scrobbled since

- # the last check

- last_timestamp = int(last_timestamp_var.get() or 0)

- tracks = [

- track for track in lastfm.get_recent_tracks().output

- if track.get('timestamp', 0) > last_timestamp

- ]

-

- # Exit if we have no new music activity

- if not tracks:

- return

-

- # Insert the new tracks on the database

- db.insert(

- engine=music_db_engine,

- table='tmp_music',

- records=[

- {

- 'artist': track.get('artist'),

- 'title': track.get('title'),

- 'album': track.get('album'),

- 'created_at': (

- datetime.fromtimestamp(track['timestamp'])

- if track.get('timestamp') else None

- ),

- }

- for track in tracks

- ]

- )

-

- # Update the LAST_SCROBBLED_TIMESTAMP variable with the timestamp of the

- # most recent played track

- last_timestamp_var.set(max(

- int(t.get('timestamp', 0))

- for t in tracks

- ))

-

- logger.info('Stored %d new scrobbled track(s)', len(tracks))

-```

-

-This cron will basically synchronize your scrobbling history to your local

-database, so we can use the local database as the source of truth for the next

-steps - no matter where the music was played from.

-

-To test the logic, simply restart Platypush, play some music from your

-favourite player(s), and check that everything gets inserted on the database -

-even if we are inserting tracks on the `tmp_music` table, the listening history

-should be automatically normalized on the appropriate tables by the triggered

-that we created at initialization time.

-

-## Updating the suggestions

-

-Now that all the plumbing to get all of your listening history in one data

-source is in place, let's move to the logic that recalculates the suggestions

-based on your listening history.

-

-We will again use the last.fm API to get tracks that are similar to those we

-listened to recently - I personally find last.fm suggestions often more

-relevant than those of Spotify's.

-

-For sake of simplicity, let's map the database tables to some SQLAlchemy ORM

-classes, so the upcoming SQL interactions can be notably simplified. The ORM

-model can be stored under e.g. `~/.config/platypush/music/db.py`:

-

-```python

-# ~/.config/platypush/scripts/music/db.py

-

-from sqlalchemy import create_engine

-from sqlalchemy.ext.automap import automap_base

-from sqlalchemy.orm import sessionmaker, scoped_session

-

-music_db_engine = 'postgresql+pg8000://dbuser:dbpass@dbhost/dbname'

-engine = create_engine(music_db_engine)

-

-Base = automap_base()

-Base.prepare(engine, reflect=True)

-Track = Base.classes.music_track

-TrackActivity = Base.classes.music_activity

-TrackSimilar = Base.classes.music_similar

-DiscoveryPlaylist = Base.classes.music_discovery_playlist

-DiscoveryPlaylistTrack = Base.classes.music_discovery_playlist_track

-NewRelease = Base.classes.new_release

-

-

-def get_db_session():

- session = scoped_session(sessionmaker(expire_on_commit=False))

- session.configure(bind=engine)

- return session()

-```

-

-Then create a new user script under e.g.

-`~/.config/platypush/scripts/music/suggestions.py` with the following content:

-

-```python

-# ~/.config/platypush/scripts/music/suggestions.py

-

-import logging

-

-from sqlalchemy import tuple_

-from sqlalchemy.dialects.postgresql import insert

-from sqlalchemy.sql.expression import bindparam

-

-from platypush.context import get_plugin, Variable

-from platypush.cron import cron

-

-from scripts.music.db import (

- get_db_session, Track, TrackActivity, TrackSimilar

-)

-

-

-logger = logging.getLogger('music_suggestions')

-

-# This stored variable will keep track of the latest activity ID for which the

-# suggestions were calculated

-last_activity_id_var = Variable('LAST_PROCESSED_ACTIVITY_ID')

-

-

-# A cronjob that runs every 5 minutes and updates the suggestions

-@cron('*/5 * * * *')

-def refresh_similar_tracks(**_):

- last_activity_id = int(last_activity_id_var.get() or 0)

-

- # Retrieve all the tracks played since the latest synchronized activity ID

- # that don't have any similar tracks being calculated yet

- with get_db_session() as session:

- recent_tracks_without_similars = \

- _get_recent_tracks_without_similars(last_activity_id)

-

- try:

- if not recent_tracks_without_similars:

- raise StopIteration(

- 'All the recent tracks have processed suggestions')

-

- # Get the last activity_id

- batch_size = 10

- last_activity_id = (

- recent_tracks_without_similars[:batch_size][-1]['activity_id'])

-

- logger.info(

- 'Processing suggestions for %d/%d tracks',

- min(batch_size, len(recent_tracks_without_similars)),

- len(recent_tracks_without_similars))

-

- # Build the track_id -> [similar_tracks] map

- similars_by_track = {

- track['track_id']: _get_similar_tracks(track['artist'], track['title'])

- for track in recent_tracks_without_similars[:batch_size]

- }

-

- # Map all the similar tracks in an (artist, title) -> info data structure

- similar_tracks_by_artist_and_title = \

- _get_similar_tracks_by_artist_and_title(similars_by_track)

-

- if not similar_tracks_by_artist_and_title:

- raise StopIteration('No new suggestions to process')

-

- # Sync all the new similar tracks to the database

- similar_tracks = \

- _sync_missing_similar_tracks(similar_tracks_by_artist_and_title)

-

- # Link listened tracks to similar tracks

- with get_db_session() as session:

- stmt = insert(TrackSimilar).values({

- 'source_track_id': bindparam('source_track_id'),

- 'target_track_id': bindparam('target_track_id'),

- 'match_score': bindparam('match_score'),

- }).on_conflict_do_nothing()

-

- session.execute(

- stmt, [

- {

- 'source_track_id': track_id,

- 'target_track_id': similar_tracks[(similar['artist'], similar['title'])].id,

- 'match_score': similar['score'],

- }

- for track_id, similars in similars_by_track.items()

- for similar in (similars or [])

- if (similar['artist'], similar['title'])

- in similar_tracks

- ]

- )

-

- session.flush()

- session.commit()

- except StopIteration as e:

- logger.info(e)

-

- last_activity_id_var.set(last_activity_id)

- logger.info('Suggestions updated')

-

-

-def _get_similar_tracks(artist, title):

- """

- Use the last.fm API to retrieve the tracks similar to a given

- artist/title pair

- """

- import pylast

- lastfm = get_plugin('lastfm')

-

- try:

- return lastfm.get_similar_tracks(

- artist=artist,

- title=title,

- limit=10,

- )

- except pylast.PyLastError as e:

- logger.warning(

- 'Could not find tracks similar to %s - %s: %s',

- artist, title, e

- )

-

-

-def _get_recent_tracks_without_similars(last_activity_id):

- """

- Get all the tracks played after a certain activity ID that don't have

- any suggestions yet.

- """

- with get_db_session() as session:

- return [

- {

- 'track_id': t[0],

- 'artist': t[1],

- 'title': t[2],

- 'activity_id': t[3],

- }

- for t in session.query(

- Track.id.label('track_id'),

- Track.artist,

- Track.title,

- TrackActivity.id.label('activity_id'),

- )

- .select_from(

- Track.__table__

- .join(

- TrackSimilar,

- Track.id == TrackSimilar.source_track_id,

- isouter=True

- )

- .join(

- TrackActivity,

- Track.id == TrackActivity.track_id

- )

- )

- .filter(

- TrackSimilar.source_track_id.is_(None),

- TrackActivity.id > last_activity_id

- )

- .order_by(TrackActivity.id)

- .all()

- ]

-

-

-def _get_similar_tracks_by_artist_and_title(similars_by_track):

- """

- Map similar tracks into an (artist, title) -> track dictionary

- """

- similar_tracks_by_artist_and_title = {}

- for similar in similars_by_track.values():

- for track in (similar or []):

- similar_tracks_by_artist_and_title[

- (track['artist'], track['title'])

- ] = track

-

- return similar_tracks_by_artist_and_title

-

-

-def _sync_missing_similar_tracks(similar_tracks_by_artist_and_title):

- """

- Flush newly calculated similar tracks to the database.

- """

- logger.info('Syncing missing similar tracks')

- with get_db_session() as session:

- stmt = insert(Track).values({

- 'artist': bindparam('artist'),

- 'title': bindparam('title'),

- }).on_conflict_do_nothing()

-

- session.execute(stmt, list(similar_tracks_by_artist_and_title.values()))

- session.flush()

- session.commit()

-

- tracks = session.query(Track).filter(

- tuple_(Track.artist, Track.title).in_(

- similar_tracks_by_artist_and_title

- )

- ).all()

-

- return {

- (track.artist, track.title): track

- for track in tracks

- }

-```

-

-Restart Platypush and let it run for a bit. The cron will operate in batches of

-10 items each (it can be easily customized), so after a few minutes your

-`music_suggestions` table should start getting populated.

-

-## Generating the discovery playlist

-

-So far we have achieved the following targets:

-

-- We have a piece of logic that synchronizes all of our listening history to a

- local database.

-- We have a way to synchronize last.fm / libre.fm scrobbles to the same

- database as well.

-- We have a cronjob that periodically scans our listening history and fetches

- the suggestions through the last.fm API.

-

-Now let's put it all together with a cron that runs every week (or daily, or at

-whatever interval we like) that does the following:

-

-- It retrieves our listening history over the specified period.

-- It retrieves the suggested tracks associated to our listening history.

-- It excludes the tracks that we've already listened to, or that have already

- been included in previous discovery playlists.

-- It generates a new discovery playlist with those tracks, ranked according to

- a simple score:

-

-$$

-\rho_i = \sum_{j \in L_i} m_{ij}

-$$

-

-Where \( \rho_i \) is the ranking of the suggested _i_-th suggested track, \(

-L_i \) is the set of listened tracks that have the _i_-th track among its

-similarities, and \( m_{ij} \) is the match score between _i_ and _j_ as

-reported by the last.fm API.

-

-Let's put all these pieces together in a cron defined in e.g.

-`~/.config/platypush/scripts/music/discovery.py`:

-

-```python

-# ~/.config/platypush/scripts/music/discovery.py

-

-import logging

-from datetime import date, timedelta

-

-from platypush.context import get_plugin

-from platypush.cron import cron

-

-from scripts.music.db import (

- get_db_session, Track, TrackActivity, TrackSimilar,

- DiscoveryPlaylist, DiscoveryPlaylistTrack

-)

-

-logger = logging.getLogger('music_discovery')

-

-

-def get_suggested_tracks(days=7, limit=25):

- """

- Retrieve the suggested tracks from the database.

-

- :param days: Look back at the listen history for the past <n> days

- (default: 7).

- :param limit: Maximum number of track in the discovery playlist

- (default: 25).

- """

- from sqlalchemy import func

-

- listened_activity = TrackActivity.__table__.alias('listened_activity')

- suggested_activity = TrackActivity.__table__.alias('suggested_activity')

-

- with get_db_session() as session:

- return [

- {

- 'track_id': t[0],

- 'artist': t[1],

- 'title': t[2],

- 'score': t[3],

- }

- for t in session.query(

- Track.id,

- func.min(Track.artist),

- func.min(Track.title),

- func.sum(TrackSimilar.match_score).label('score'),

- )

- .select_from(

- Track.__table__

- .join(

- TrackSimilar.__table__,

- Track.id == TrackSimilar.target_track_id

- )

- .join(

- listened_activity,

- listened_activity.c.track_id == TrackSimilar.source_track_id,

- )

- .join(

- suggested_activity,

- suggested_activity.c.track_id == TrackSimilar.target_track_id,

- isouter=True

- )

- .join(

- DiscoveryPlaylistTrack,

- Track.id == DiscoveryPlaylistTrack.track_id,

- isouter=True

- )

- )

- .filter(

- # The track has not been listened

- suggested_activity.c.track_id.is_(None),

- # The track has not been suggested already

- DiscoveryPlaylistTrack.track_id.is_(None),

- # Filter by recent activity

- listened_activity.c.created_at >= date.today() - timedelta(days=days)

- )

- .group_by(Track.id)

- # Sort by aggregate match score

- .order_by(func.sum(TrackSimilar.match_score).desc())

- .limit(limit)

- .all()

- ]

-

-

-def search_remote_tracks(tracks):

- """

- Search for Tidal tracks given a list of suggested tracks.

- """

- # If you use Spotify instead of Tidal, simply replacing `music.tidal`

- # with `music.spotify` here should suffice.

- tidal = get_plugin('music.tidal')

- found_tracks = []

-

- for track in tracks:

- query = track['artist'] + ' ' + track['title']

- logger.info('Searching "%s"', query)

- results = (

- tidal.search(query, type='track', limit=1).output.get('tracks', [])

- )

-

- if results:

- track['remote_track_id'] = results[0]['id']

- found_tracks.append(track)

- else:

- logger.warning('Could not find "%s" on TIDAL', query)

-

- return found_tracks

-

-

-def refresh_discover_weekly():

- # If you use Spotify instead of Tidal, simply replacing `music.tidal`

- # with `music.spotify` here should suffice.

- tidal = get_plugin('music.tidal')

-

- # Get the latest suggested tracks

- suggestions = search_remote_tracks(get_suggested_tracks())

- if not suggestions:

- logger.info('No suggestions available')

- return

-

- # Retrieve the existing discovery playlists

- # Our naming convention is that discovery playlist names start with

- # "Discover Weekly" - feel free to change it

- playlists = tidal.get_playlists().output

- discover_playlists = sorted(

- [

- pl for pl in playlists

- if pl['name'].lower().startswith('discover weekly')

- ],

- key=lambda pl: pl.get('created_at', 0)

- )

-

- # Delete all the existing discovery playlists

- # (except the latest one). We basically keep two discovery playlists at the

- # time in our collection, so you have two weeks to listen to them before they

- # get deleted. Feel free to change this logic by modifying the -1 parameter

- # with e.g. -2, -3 etc. if you want to store more discovery playlists.

- for playlist in discover_playlists[:-1]:

- logger.info('Deleting playlist "%s"', playlist['name'])

- tidal.delete_playlist(playlist['id'])

-

- # Create a new discovery playlist

- playlist_name = f'Discover Weekly [{date.today().isoformat()}]'

- pl = tidal.create_playlist(playlist_name).output

- playlist_id = pl['id']

-

- tidal.add_to_playlist(

- playlist_id,

- [t['remote_track_id'] for t in suggestions],

- )

-

- # Add the playlist to the database

- with get_db_session() as session:

- pl = DiscoveryPlaylist(name=playlist_name)

- session.add(pl)

- session.flush()

- session.commit()

-

- # Add the playlist entries to the database

- with get_db_session() as session:

- for track in suggestions:

- session.add(

- DiscoveryPlaylistTrack(

- playlist_id=pl.id,

- track_id=track['track_id'],

- )

- )

-

- session.commit()

-

- logger.info('Discover Weekly playlist updated')

-

-

-@cron('0 6 * * 1')

-def refresh_discover_weekly_cron(**_):

- """

- This cronjob runs every Monday at 6 AM.

- """

- try:

- refresh_discover_weekly()

- except Exception as e:

- logger.exception(e)

-

- # (Optional) If anything went wrong with the playlist generation, send

- # a notification over ntfy

- ntfy = get_plugin('ntfy')

- ntfy.send_message(

- topic='mirrored-notifications-topic',

- title='Discover Weekly playlist generation failed',

- message=str(e),

- priority=4,

- )

-```

-

-You can test the cronjob without having to wait for the next Monday through

-your Python interpreter:

-

-```python

->>> import os

->>>

->>> # Move to the Platypush config directory

->>> path = os.path.join(os.path.expanduser('~'), '.config', 'platypush')

->>> os.chdir(path)

->>>

->>> # Import and run the cron function

->>> from scripts.music.discovery import refresh_discover_weekly_cron

->>> refresh_discover_weekly_cron()

-```

-

-If everything went well, you should soon see a new playlist in your collection

-named _Discover Weekly [date]_. Congratulations!

-

-## Release radar playlist

-

-Another great feature of Spotify and Tidal is the ability to provide "release

-radar" playlists that contain new releases from artists that we may like.

-

-We now have a powerful way of creating such playlists ourselves though. We

-previously configured Platypush to subscribe to the RSS feed from

-newalbumreleases.net. Populating our release radar playlist involves the

-following steps:

-

-1. Creating a hook that reacts to [`NewFeedEntryEvent`

- events](https://docs.platypush.tech/platypush/events/rss.html) on this feed.

-2. The hook will store new releases that match artists in our collection on the

- `new_release` table that we created when we initialized the database.

-3. A cron will scan this table on a weekly basis, search the tracks on

- Spotify/Tidal, and populate our playlist just like we did for _Discover

- Weekly_.

-

-Let's put these pieces together in a new user script stored under e.g.

-`~/.config/platypush/scripts/music/releases.py`:

-

-```python

-# ~/.config/platypush/scripts/music/releases.py

-

-import html

-import logging

-import re

-import threading

-from datetime import date, timedelta

-from typing import Iterable, List

-

-from platypush.context import get_plugin

-from platypush.cron import cron

-from platypush.event.hook import hook

-from platypush.message.event.rss import NewFeedEntryEvent

-

-from scripts.music.db import (

- music_db_engine, get_db_session, NewRelease

-)

-

-

-create_lock = threading.RLock()

-logger = logging.getLogger(__name__)

-

-

-def _split_html_lines(content: str) -> List[str]:

- """

- Utility method used to convert and split the HTML lines reported

- by the RSS feed.

- """

- return [

- l.strip()

- for l in re.sub(

- r'(</?p[^>]*>)|(<br\s*/?>)',

- '\n',

- content

- ).split('\n') if l

- ]

-

-

-def _get_summary_field(title: str, lines: Iterable[str]) -> str | None:

- """

- Parse the fields of a new album from the feed HTML summary.

- """

- for line in lines:

- m = re.match(rf'^{title}:\s+(.*)$', line.strip(), re.IGNORECASE)

- if m:

- return html.unescape(m.group(1))

-

-

-@hook(NewFeedEntryEvent, feed_url='https://newalbumreleases.net/category/cat/feed/')

-def save_new_release(event: NewFeedEntryEvent, **_):

- """

- This hook is triggered whenever the newalbumreleases.net has new entries.

- """

- # Parse artist and album

- summary = _split_html_lines(event.summary)

- artist = _get_summary_field('artist', summary)

- album = _get_summary_field('album', summary)

- genre = _get_summary_field('style', summary)

-

- if not (artist and album):

- return

-

- # Check if we have listened to this artist at least once

- db = get_plugin('db')

- num_plays = int(

- db.select(

- engine=music_db_engine,

- query=

- '''

- select count(*)

- from music_activity a

- join music_track t

- on a.track_id = t.id

- where artist = :artist

- ''',

- data={'artist': artist},

- ).output[0].get('count', 0)

- )

-

- # If not, skip it

- if not num_plays:

- return

-

- # Insert the new release on the database

- with create_lock:

- db.insert(

- engine=music_db_engine,

- table='new_release',

- records=[{

- 'artist': artist,

- 'album': album,

- 'genre': genre,

- }],

- key_columns=('artist', 'album'),

- on_duplicate_update=True,

- )

-

-

-def get_new_releases(days=7):

- """

- Retrieve the new album releases from the database.

-

- :param days: Look at albums releases in the past <n> days

- (default: 7)

- """

- with get_db_session() as session:

- return [

- {

- 'artist': t[0],

- 'album': t[1],

- }

- for t in session.query(

- NewRelease.artist,

- NewRelease.album,

- )

- .select_from(

- NewRelease.__table__

- )

- .filter(

- # Filter by recent activity

- NewRelease.created_at >= date.today() - timedelta(days=days)

- )

- .all()

- ]

-

-

-def search_tidal_new_releases(albums):

- """

- Search for Tidal albums given a list of objects with artist and title.

- """

- tidal = get_plugin('music.tidal')

- expanded_tracks = []

-

- for album in albums:

- query = album['artist'] + ' ' + album['album']

- logger.info('Searching "%s"', query)

- results = (

- tidal.search(query, type='album', limit=1)

- .output.get('albums', [])

- )

-

- if results:

- album = results[0]

-

- # Skip search results older than a year - some new releases may

- # actually be remasters/re-releases of existing albums

- if date.today().year - album.get('year', 0) > 1:

- continue

-

- expanded_tracks += (

- tidal.get_album(results[0]['id']).

- output.get('tracks', [])

- )

- else:

- logger.warning('Could not find "%s" on TIDAL', query)

-

- return expanded_tracks

-

-

-def refresh_release_radar():

- tidal = get_plugin('music.tidal')

-

- # Get the latest releases

- tracks = search_tidal_new_releases(get_new_releases())

- if not tracks:

- logger.info('No new releases found')

- return

-

- # Retrieve the existing new releases playlists

- playlists = tidal.get_playlists().output

- new_releases_playlists = sorted(

- [

- pl for pl in playlists

- if pl['name'].lower().startswith('new releases')

- ],

- key=lambda pl: pl.get('created_at', 0)

- )

-

- # Delete all the existing new releases playlists

- # (except the latest one)

- for playlist in new_releases_playlists[:-1]:

- logger.info('Deleting playlist "%s"', playlist['name'])

- tidal.delete_playlist(playlist['id'])

-

- # Create a new releases playlist

- playlist_name = f'New Releases [{date.today().isoformat()}]'

- pl = tidal.create_playlist(playlist_name).output

- playlist_id = pl['id']

-

- tidal.add_to_playlist(

- playlist_id,

- [t['id'] for t in tracks],

- )

-

-

-@cron('0 7 * * 1')

-def refresh_release_radar_cron(**_):

- """

- This cron will execute every Monday at 7 AM.

- """

- try:

- refresh_release_radar()

- except Exception as e:

- logger.exception(e)

- get_plugin('ntfy').send_message(

- topic='mirrored-notifications-topic',

- title='Release Radar playlist generation failed',

- message=str(e),

- priority=4,

- )

-```

-

-Just like in the previous case, it's quite easy to test that it works by simply

-running `refresh_release_radar_cron` in the Python interpreter. Just like in

-the case of the discovery playlist, things will work also if you use Spotify

-instead of Tidal - just replace the `music.tidal` plugin references with

-`music.spotify`.

-

-If it all goes as expected, you will get a new playlist named _New Releases

-[date]_ every Monday with the new releases from artist that you have listened.

-

-## Conclusions

-

-Music junkies have the opportunity to discover a lot of new music today without

-ever leaving their music app. However, smart playlists provided by the major

-music cloud providers are usually implicit lock-ins, and the way they select

-the tracks that should end up in your playlists may not even be transparent, or

-even modifiable.

-

-After reading this article, you should be able to generate your discovery and

-new releases playlists, without relying on the suggestions from a specific

-music cloud. This could also make it easier to change your music provider: even

-if you decide to drop Spotify or Tidal, your music suggestions logic will

-follow you whenever you decide to go.

diff --git a/markdown/Build-your-customizable-voice-assistant-with-Platypush.md b/markdown/Build-your-customizable-voice-assistant-with-Platypush.md

index 15c68e1..a754c60 100644

--- a/markdown/Build-your-customizable-voice-assistant-with-Platypush.md

+++ b/markdown/Build-your-customizable-voice-assistant-with-Platypush.md

@@ -95,7 +95,7 @@ First things first: in order to get your assistant working you’ll need:

I’ll also assume that you have already installed Platypush on your device — the instructions are provided on

the [Github page](https://git.platypush.tech/platypush/platypush), on

-the [wiki](https://git.platypush.tech/platypush/platypush/wiki/Home#installation) and in

+the [wiki](https://git.platypush.tech/platypush/platypush/-/wikis/home#installation) and in

my [previous article](https://blog.platypush.tech/article/Ultimate-self-hosted-automation-with-Platypush).

Follow these steps to get the assistant running:

diff --git a/markdown/Building-a-better-digital-reading-experience.md b/markdown/Building-a-better-digital-reading-experience.md

deleted file mode 100644

index 2a414b0..0000000

--- a/markdown/Building-a-better-digital-reading-experience.md

+++ /dev/null

@@ -1,786 +0,0 @@

-[//]: # (title: Building a better digital reading experience)

-[//]: # (description: Bypass client-side restrictions on news and blog articles, archive them and read them on any offline reader)

-[//]: # (image: https://s3.platypush.tech/static/images/reading-experience.jpg)

-[//]: # (author: Fabio Manganiello <fabio@manganiello.tech>)

-[//]: # (published: 2025-06-05)

-

-I've always been an avid book reader as a kid.

-

-I liked the smell of the paper, the feeling of turning the pages, and the

-ability to read them anywhere I wanted, as well as lend them to friends and

-later share our reading experiences.

-

-As I grew and chose a career in tech and a digital-savvy lifestyle, I started

-to shift my consumption from the paper to the screen. But I *still* wanted the

-same feeling of a paper book, the same freedom of reading wherever I wanted

-without distractions, and without being constantly watched by someone who will

-recommend me other products based on what I read or how I read.

-

-I was an early support of the Amazon Kindle idea. I quickly moved most of my

-physical books to the Kindle, I became a vocal supporter of online magazines

-that also provided Kindle subscriptions, and I started to read more and more on

-e-ink devices.

-

-Then I noticed that, after an initial spike, not many magazines and blogs

-provided Kindle subscriptions or EPub versions of their articles.

-

-So nevermind - I started tinkering my way out of it and [wrote an article in

-2019](https://blog.platypush.tech/article/Deliver-articles-to-your-favourite-e-reader-using-Platypush)

-on how to use [Platypush](https://platypush.tech) with its

-[`rss`](https://docs.platypush.tech/platypush/plugins/rss.html),

-[`instapaper`](https://docs.platypush.tech/platypush/plugins/instapaper.html) and

-[`gmail`](https://docs.platypush.tech/platypush/plugins/google.mail.html)

-plugins to subscribe to RSS feeds, parse new articles, convert them to PDF and

-deliver them to my Kindle.

-

-Later I moved from Kindle to the first version of the

-[Mobiscribe](https://www.mobiscribe.com), as Amazon started to be more and more

-restrictive in its option to import and export stuff out of the Kindle. Using

-Calibre and some DRM removal tools to export articles or books I had regularly

-purchased was gradually getting more cumbersome and error-prone, and the

-Mobiscribe at that time was an interesting option because it offered a decent

-e-ink device, for a decent price, and it ran Android (an ancient version, but

-at least one that was sufficient to run [Instapaper](https://instapaper.com)

-and [KOReader](https://koreader.rocks)).

-

-That simplified things a bit because I didn't need intermediary delivery via

-email to get stuff on my Kindle or Calibre to try and pull things out of it. I

-was using Instapaper on all of my devices, included the Mobiscribe, I could

-easily scrape and push articles to it through Platypush, and I could easily

-keep track of my reading state across multiple devices.

-

-Good things aren't supposed to last though.

-

-Instapaper started to feel quite limited in its capabilities, and I didn't like

-the idea of a centralized server holding all of my saved articles. So I've

-moved to a self-hosted [Wallabag](https://wallabag.org) instance in the

-meantime - which isn't perfect, but provides a lot more customization and

-control.

-

-Moreover, more and more sites started implementing client-side restrictions for

-my scrapers - Instapaper was initially more affected, as it was much easier for

-publisher's websites to detect scraping requests coming from the same subnet,

-but slowly Wallabag too started bumping into Cloudflare screens, CAPTCHAs and

-paywalls.

-

-So the Internet Archive provided some temporary relief - I could still archive

-articles there, and then instruct my Wallabag instance to read them from the

-archived link.

-

-Except that, in the past few months, the Internet Archive has also started

-implementing anti-scraping features, and you'll most likely get a Cloudflare

-screen if you try and access an article from an external scraper.

-

-## An ethical note before continuing

-

-_Feel free to skip this part and go to the technical setup section if you

-already agree that, if buying isn't owning, then piracy isn't stealing._

-

-#### Support your creators (even when you wear your pirate hat)

-

-I _do not_ condone nor support piracy when it harms content creators.

-

-Being a content creator myself I know how hard it is to squeeze some pennies

-out of our professions or hobbies, especially in a world like the digital

-one where there are often too many intermediaries to take a share of the pie.

-

-I don't mind however harming any intermediaries that add friction to the

-process just to have a piece of the pie, stubbornly rely on unsustainable

-business models that sacrifices both the revenue of the authors and the privacy

-and freedom of the readers, and prevent me from having a raw file that I can

-download and read wherever I want just I would do with a physical book or

-magazine. It's because of those folks that the digital reading experience,

-despite all the initial promises, has become much worse than the analog one.

-So I don't see a big moral conundrum in pirating to harm those folks and get

-back my basic freedoms as a reader.

-

-But I do support creators via Patreon. I pay for subscriptions to digital

-magazines that I will anyway never read through their official app. Every now

-and then I buy physical books and magazines that I've already read and that

-I've really enjoyed, to support the authors, just like I still buy some vinyls

-of albums I really love even though I could just stream them. And I send

-one-off donations when I find that some content was particularly useful to me.

-And I'd probably support content creators even more if only more of their

-distribution channels allowed me to pay only for the digital content that I

-want to consume, if only there was a viable digital business model also for the

-occasional reader, instead of everybody trying to lock me into a Hotel

-California subscription ("_you can check out any time you like, but you can

-never leave_") just because their business managers are those folks who have

-learned how to use the hammer of the recurring revenue, and think that every

-problem in the world is a subscription nail to be hit on its head. Maybe

-micropayments could be a solution, but for now cryptobros have decided that the

-future of modern digital payments should be more like a gambling den for thugs,

-shitcoin speculators and miners, rather than a solution to directly put in

-contact content creators and consumers, bypassing all the intermediaries, and

-let consumers pay only for what they consume.

-

-#### The knowledge distribution problem

-

-I also believe that the most popular business model behind most of the

-high-quality content available online (locking people into apps and

-subscriptions in order to view the content) is detrimental for the distribution

-of knowledge in what's supposed to be the age of information. If I want to be

-exposed to diverse opinions on what's going on in different industries or

-different parts of the world, I'd probably need at least a dozen subscriptions

-and a similar number of apps on my phone, all pushing notifications, while in

-earlier generations folks could just walk into their local library or buy a

-single book or a single issue of a newspaper every now and then.

-

-I don't think that we should settle for a world where the best reports, the

-best journalism and the most insightful blog articles are locked behind

-paywalls, subscriptions and closed apps, without even a Spotify/Netflix-like

-all-you-can-eat solution being considered to lower access barriers, and all

-that's left for free is cheap disinformation on social media and AI-generated

-content. Future historians will have a very hard time deciphering what was

-going on in the world in the 2020s, because most of the high-quality content

-needed to decipher our age is locked behind some kind of technological wall.

-The companies that run those sites and build those apps will most likely be

-gone in a few years or decades. And, if publishers also keep waging war against

-folks like the Internet Archive, then future historians may really start

-looking at our age like some kind of strange hyper-connected digital dark age.

-

-#### The content consumption problem

-

-I also think that it's my right, as a reader, to be able to consume content on

-a medium without distractions - like social media buttons, ads, comments, or

-other stuff that distracts me from the main content. And, if the publisher

-doesn't provide me with a solution for that, and I have already paid for the

-content, then I should be granted the right to build such a solution myself.

-Even in an age where attention is the new currency, at least we should not try

-to grab people's attention when they're trying to read some dense content. Just

-like you wouldn't interrupt someone who's reading in a library saying "hey btw,

-I know a shop that sells exactly the kind of tea cups described in the page

-you're reading right now".

-

-And I also demand the right to access the content I've paid however I want.

-

-Do I want to export everything to Markdown or read it in ASCII art in a

-terminal? Do I want to export it to EPUB so I can read it on my e-ink device?

-Do I want to export it to PDF and email it to one of my colleagues for a research

-project, or to myself for later reference? Do I want to access it without

-having to use their tracker-ridden mobile app, or without being forced to see

-ads despite having paid for a subscription? Well, that's my business. I firmly

-believe that it's not an author's or publisher's right to dictate how I access

-the content after paying for it. Just like in earlier days nobody minded if,

-after purchasing a book, I would share it with my kids, or lend it to a friend,

-or scan it and read it on my computer, or make the copies of a few pages to

-bring to my students or my colleagues for a project, or leave it on a bench at

-the park or in a public bookshelf after reading it.

-

-If some freedoms were legally granted to me before, and now they've been taken

-away, then it's not piracy if I keep demanding those freedoms. The whole point

-of a market-based economy should be to keep the customer happy and give more

-choice and freedom, not less, as technology advances. Otherwise the market is

-probably not working as intended.

-

-#### The content ownership problem

-

-Content ownership is another issue in the current digital media economy.

-

-I'll probably no longer be able to access content I've read during my

-subscription period once my subscription expires, especially if it was only

-available through an app. In the past I could cancel my subscription to

-National Geographic at any moment, and all the copies I had purchased wouldn't

-just magically disappear from my bookshelf after paying the last bill.

-

-I'll not be able to pass on the books or magazines I've read in my lifetime to

-my kid. I'll never be able to lend them to someone else, just like I would leave

-a book I had read on a public bookshelf or a bench at the park for someone else

-to read it.

-

-In other words, buying now grants you a temporary license to access the content

-on someone else's device - you don't really own anything.

-

-So, if buying isn't owning, piracy isn't stealing.

-

-And again, to make it very clear, I'll be referring to *personal use* in this

-article. The case where you support creators through other means, but the

-distribution channel and the business models are the problem, and you just

-want your basic freedoms as a content consumer back.

-

-If however you want to share scraped articles on the Web, or even worse profit

-from access to it without sharing those profits with the creators, then you're

-*really* doing the kind of piracy I can't condone.

-

-With this out of the way, let's get our hands dirty.

-

-## The setup

-

-A high-level overview of the setup is as follows:

-

-<img alt="High-level overview of the scraper setup" src="https://s3.platypush.tech/static/images/wallabag-scraper-architecture.png" width="650px">

-

-Let's break down the building blocks of this setup:

-

-- **[Redirector](https://addons.mozilla.org/en-US/firefox/addon/redirector/)**

- is a browser extension that allows you to redirect URLs based on custom

- rules as soon as the page is loaded. This is useful to redirect paywalled

- resources to the Internet Archive, which usually stores full copies of the

- content. Even if you regularly paid for a subscription to a magazine, and you

- can read the article on the publisher's site or from their app, your Wallabag

- scraper will still be blocked if the site implements client-side restrictions

- or is protected by Cloudflare. So you need to redirect the URL to the Internet

- Archive, which will then return a copy of the article that you can scrape.

-

-- **[Platypush](https://platypush.tech)** is a Python-based general-purpose

- platform for automation that I've devoted a good chunk of the past decade

- to develop. It allows you to run actions, react to events and control devices

- and services through a unified API and Web interface, and it comes with

- [hundreds of supported integrations](https://docs.platypush.tech). We'll use

- the [`wallabag`](https://docs.platypush.tech/platypush/plugins/wallabag.html)

- plugin to push articles to your Wallabag instance, and optionally the

- [`rss`](https://docs.platypush.tech/platypush/plugins/rss.html) plugin if you

- want to programmatically subscribe to RSS feeds, scrape articles and archive

- them to Wallabag, and the

- [`ntfy`](https://docs.platypush.tech/platypush/plugins/ntfy.html) plugin to

- optionally send notifications to your mobile device when new articles are

- available.

-

-- **[Platypush Web extension](https://addons.mozilla.org/en-US/firefox/addon/platypush/)**

- is a browser extension that allows you to interact with Platypush from your

- browser, and it also provides a powerful JavaScript API that you can leverage

- to manipulate the DOM and automate tasks in the browser. It's like a

- [Greasemonkey](https://addons.mozilla.org/en-US/firefox/addon/greasemonkey/)

- or [Tampermonkey](https://addons.mozilla.org/en-US/firefox/addon/tampermonkey/)

- extension that allows you to write scripts to customize your browser

- experience, but it also allows you to interact with Platypush and leverage

- its backend capabilities. On top of that, I've also added built-in support

- for the [Mercury Parser API](https://github.com/usr42/mercury-parser) in it,

- so you can easily distill articles - similar to what Firefox does with its

- [Reader

- Mode](https://support.mozilla.org/en-US/kb/firefox-reader-view-clutter-free-web-pages),

- but in this case you can customize the layout and modify the original DOM

- directly, and the distilled content can easily be dispatched to any other

- service or application. We'll use it to:

-

- - Distill the article content from the page, removing all the

- unnecessary elements (ads, comments, etc.) and leaving only the main text

- and images.

-

- - Archive the distilled article to Wallabag, so you can read it later

- from any device that has access to your Wallabag instance.

-

-- **[Wallabag](https://wallabag.org)** is a self-hosted read-it-later

- service that allows you to save articles from the Web and read them later,

- even offline. It resembles the features of the ([recently

- defunct](https://support.mozilla.org/en-US/kb/future-of-pocket))

- [Pocket](https://getpocket.com/home). It provides a Web interface, mobile

- apps and browser extensions to access your saved articles, and it can also be

- used as a backend for scraping articles from the Web.

-

-- (_Optional_) **[KOReader](https://koreader.rocks)** is an

- open-source e-book reader that runs on a variety of devices, including any

- e-ink readers that run Android (and even the

- [Remarkable](https://github.com/koreader/koreader/wiki/Installation-on-Remarkable)).

- It has a quite minimal interface and it may take a while to get used to, but

- it's extremely powerful and customizable. I personally prefer it over the

- official Wallabag app - it has a native Wallabag integration, as well as OPDS

- integration to synchronize with my

- [Ubooquity](https://docs.linuxserver.io/images/docker-ubooquity/) server,

- synchronization of highlights and notes to Nextcloud Notes, WebDAV support

- (so you can access anything hosted on e.g. your Nextcloud instance), progress

- sync across devices through their [sync

- server](https://github.com/koreader/koreader-sync-server), and much more. It

- basically gives you a single app to access your saved articles, your books,

- your notes, your highlights, and your documents.

-

-- (_Optional_) An Android-based e-book reader to run KOReader on. I have

- recently switched from my old Mobiscribe to an [Onyx BOOX Note Air

- 4](https://www.onyxbooxusa.com/onyx-boox-note-air4-c) and I love it. It's

- powerful, the display is great, it runs basically any Android app out there

- (and I've had no issues with running any apps installed through

- [F-Droid](https://f-droid.org)), and it also has a good set of stock apps,

- and most of them support WebDAV synchronization - ideal if you have a

- [Nextcloud](https://nextcloud.com) instance to store your documents and

- archived links.

-

-**NOTE**: The Platypush extension only works with Firefox, on any Firefox-based

-browser, or on any browser out there that still supports the [Manifest

-V2](https://blog.mozilla.org/addons/2024/03/13/manifest-v3-manifest-v2-march-2024-update/).

-The Manifest V3 has been a disgrace that Google has forced all browser

-extension developers to swallow. I won't go in detail here, but the Platypush

-extension needs to be able to perform actions (such as calls to custom remote

-endpoints and runtime interception of HTTP headers) that are either no longer

-supported on Manifest V3, or that are only supported through laborious

-workarounds (such as using the declarative Net Request API to explicitly

-define what you want to intercept and what remote endpoints you want to call).

-

-**NOTE 2**: As of June 2025, the Platypush extension is only supported on

-Firefox for desktop. A Firefox for Android version [is

-work in progress](https://git.platypush.tech/platypush/platypush-webext/issues/1).

-

-Let's dig deeper into the individual components of this setup.

-

-## Redirector

-

-

-

-This is a nice addition if you want to automatically view some links through

-the Internet Archive rather than the original site.

-

-You can install it from the [Firefox Add-ons site](https://addons.mozilla.org/en-US/firefox/addon/redirector/).

-Once installed, you can create a bunch of rules (regular expressions are supported)

-to redirect URLs from paywalled domains that you visit often to the Internet Archive.

-

-For example, this regular expression:

-

-```

-^(https://([\w-]+).substack.com/p/.*)

-```

-

-will match any Substack article URL, and you can redirect it to the Internet Archive

-through this URL:

-

-```

-https://archive.is/$1

-```

-

-Next time you open a Substack article, it will be automatically redirected to its

-most recent archived version - or it will prompt you to archive the URL if it's not

-been archived yet.

-

-## Wallabag

-

-

-

-Wallabag can easily be installed on any server [through Docker](https://doc.wallabag.org/developer/docker/).

-

-Follow the documentation for the set up of your user and create an API token

-from the Web interface.

-

-It's also advised to [set up a reverse

-proxy](https://doc.wallabag.org/admin/installation/virtualhosts/#configuration-on-nginx)

-in front of Wallabag, so you can easily access it over HTTPS.

-

-Once configured the reverse proxy, you can generate a certificate for it - for

-example, if you use [`certbot`](https://certbot.eff.org/) and `nginx`:

-

-```bash

-❯ certbot --nginx -d your-domain.com

-```

-

-Then you can access your Wallabag instance at `https://your-domain.com` and log

-in with the user you created.

-

-Bonus: I personally find the Web interface of Wallabag quite ugly - the

-fluorescent light blue headers are distracting and the default font and column

-width isn't ideal for my taste. So I made a [Greasemonkey/Tampermonkey

-script](https://gist.manganiello.tech/fabio/ec9e28170988441d9a091b3fa6535038)

-to make it better if you want (see screenshot above).

-

-## [_Optional_] ntfy

-

-[ntfy](https://ntfy.sh) is a simple HTTP-based pub/sub notification service

-that you can use to send notifications to your devices or your browser. It

-provides both an [Android app](https://f-droid.org/en/packages/io.heckel.ntfy/)

-and a [browser

-addon](https://addons.mozilla.org/en-US/firefox/addon/send-to-ntfy/) to send

-and receive notifications, allowing you to open saved links directly on your

-phone or any other device subscribed to the same topic.

-

-Running it via docker-compose [is quite

-straightforward](https://github.com/binwiederhier/ntfy/blob/main/docker-compose.yml).

-

-It's also advised to serve it behind a reverse proxy with HTTPS support,

-keeping in mind to set the right header for the Websocket paths - example nginx

-configuration:

-

-```nginx

-map $http_upgrade $connection_upgrade {

- default upgrade;

- '' close;

-}

-

-server {

- server_name notify.example.com;

-

- location / {

- proxy_pass http://your-internal-ntfy-host:port;

-

- client_max_body_size 5M;

-

- proxy_read_timeout 60;

- proxy_connect_timeout 60;

- proxy_redirect off;

-

- proxy_set_header Host $http_host;

- proxy_set_header X-Real-IP $remote_addr;

- proxy_set_header X-Forwarded-Ssl on;

- proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

- }

-

- location ~ .*/ws/?$ {

- proxy_http_version 1.1;

- proxy_set_header Upgrade $http_upgrade;

- proxy_set_header Connection $connection_upgrade;

- proxy_set_header Host $http_host;

- proxy_pass http://your-internal-ntfy-host:port;

- }

-}

-```

-

-Once the server is running, you can check the connectivity by opening your

-server's main page in your browser.

-

-**NOTE**: Be _careful_ when choosing your ntfy topic name, especially if you

-are using a public instance. ntfy by default doesn't require any authentication

-for publishing or subscribing to a topic. So choose a random name (or at least

-a random prefix/suffix) for your topics and treat them like a password.

-

-## Platypush

-

-Create a new virtual environment and install Platypush through `pip` (the

-plugins we'll use in the first part don't require any additional dependencies):

-

-```bash

-❯ python3 -m venv venv

-❯ source venv/bin/activate

-❯ pip install platypush

-```

-

-Then create a new configuration file `~/.config/platypush/config.yaml` with the

-following configuration:

-

-```yaml

-# Web server configuration

-backend.http:

- # port: 8008

-

-# Wallabag configuration

-wallabag:

- server_url: https://your-domain.com

- client_id: your_client_id

- client_secret: your_client_secret

- # Your Wallabag user credentials are required for the first login.

- # It's also advised to keep them here afterwards so the refresh

- # token can be automatically updated.

- username: your_username

- password: your_password

-```

-

-Then you can start the service with:

-

-```bash

-❯ platypush

-```

-

-You can also create a systemd service to run Platypush in the background:

-

-```bash

-❯ mkdir -p ~/.config/systemd/user

-❯ cat <<EOF > ~/.config/systemd/user/platypush.service

-[Unit]

-Description=Platypush service

-After=network.target

-

-[Service]

-ExecStart=/path/to/venv/bin/platypush

-Restart=always

-RestartSec=5

-EOF

-❯ systemctl --user daemon-reload

-❯ systemctl --user enable --now platypush.service

-```

-

-After starting the service, head over to `http://your_platypush_host:8008` (or

-the port you configured in the `backend.http` section) and create a new user

-account.

-

-It's also advised to serve the Platypush Web server behind a reverse proxy with

-HTTPS support if you want it to easily be accessible from the browser extension -

-a basic `nginx` configuration [is available on the

-repo](https://git.platypush.tech/platypush/platypush/src/branch/master/examples/nginx/nginx.sample.conf).

-

-## Platypush Web extension

-

-You can install the Platypush Web extension from the [Firefox Add-ons

-site](https://addons.mozilla.org/en-US/firefox/addon/platypush/).

-

-After installing it, click on the extension popup and add the URL of your

-Platypush Web server.

-

-

-

-When successfully connected, you should see the device in the main menu, you

-can run commands on it and save actions.

-

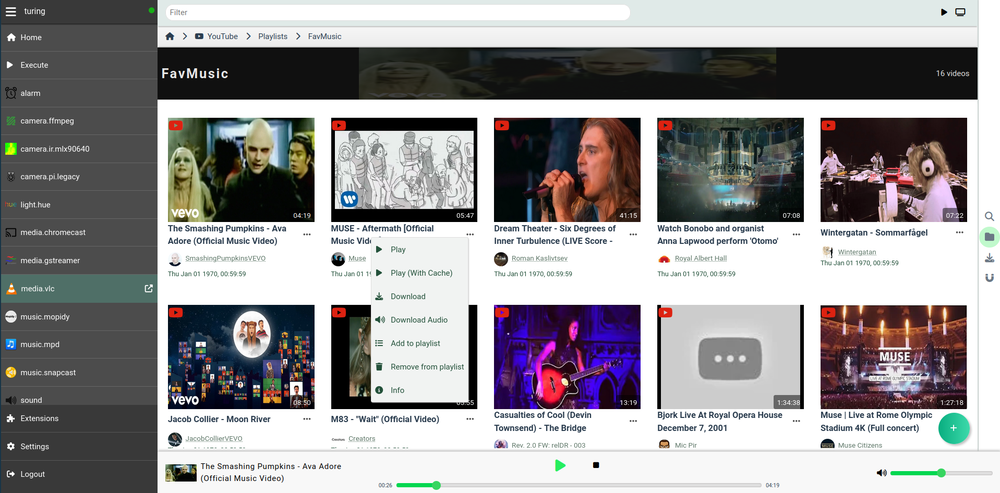

-A good place to start familiarizing with the Platypush API is the _Run Action_

-dialog, which allows you to run commands on your server and provides

-autocomplete for the available actions, as well as documentation about their

-arguments.

-

-

-

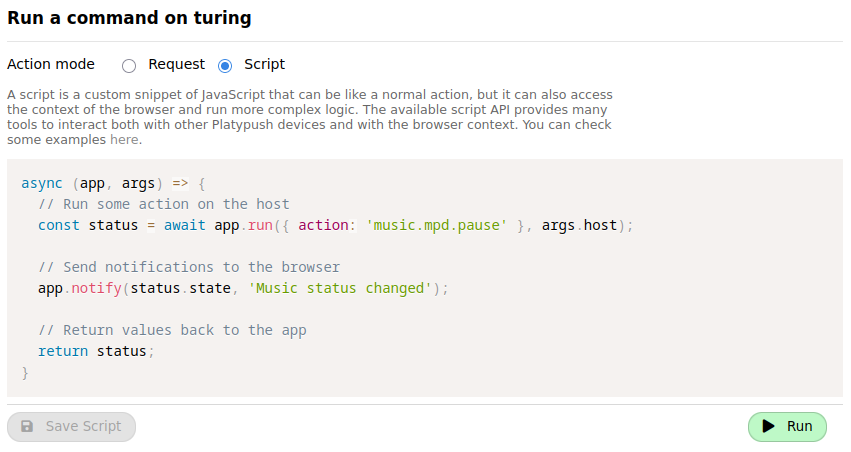

-The default action mode is _Request_ (i.e. single requests against the API).

-You can also pack together more actions on the backend [into

-_procedures_](https://docs.platypush.tech/wiki/Quickstart.html#greet-me-with-lights-and-music-when-i-come-home),

-which can be written either in the YAML config or as Python scripts (by default

-loaded from `~/.config/platypush/scripts`). If correctly configured, procedures

-will be available in the _Run Action_ dialog.

-

-The other mode, which we'll use in this article, is _Script_. In this mode you

-can write custom JavaScript code that can interact with your browser.

-

-

-

-[Here](https://gist.github.com/BlackLight/d80c571705215924abc06a80994fd5f4) is

-a sample script that you can use as a reference for the API exposed by the

-extension. Some examples include:

-

-- `app.run`, to run an action on the Platypush backend

-

-- `app.getURL`, `app.setURL` and `app.openTab` to get and set the current URL,

- or open a new tab with a given URL

-

-- `app.axios.get`, `app.axios.post` etc. to perform HTTP requests to other

- external services through the Axios library

-

-- `app.getDOM` and `app.setDOM` to get and set the current page DOM

-

-- `app.mercury.parse` to distill the current page content using the Mercury

- Parser API

-

-### Reader Mode script

-

-We can put together the building blocks above to create our first script, which

-will distill the current page content and swap the current page DOM with the

-simplified content - with no ads, comments, or other distracting visual

-elements. The full content of the script is available

-[here](https://gist.manganiello.tech/fabio/c731b57ff6b24d21a8f43fbedde3dc30).

-

-This is akin to what Firefox' [Reader

-Mode](https://support.mozilla.org/en-US/kb/firefox-reader-view-clutter-free-web-pages)

-does, but with much more room for customization.

-

-Note that for this specific script we don't need any interactions with the

-Platypush backend. Everything happens on the client, as the Mercury API is

-built into the Platypush Web extension.

-

-Switch to _Script_ mode in the _Run Action_ dialog, paste the script content

-and click on _Save Script_. You can also choose a custom name, icon

-([FontAwesome](https://fontawesome.com/icons) icon classes are supported),

-color and group for the script. Quite importantly, you can also associate a

-keyboard shortcut to it, so you can quickly distill a page without having to

-search for the command either in the extension popup or in the context menu.

-

-### Save to Wallabag script

-

-Now that we have a script to distill the current page content, we can create

-another script to save the distilled content (if available) to Wallabag.

-Otherwise, it will just save the original page content.

-

-The full content of the script is available

-[here](https://gist.manganiello.tech/fabio/8f5b08d8fbaa404bafc6fdeaf9b154b4).

-The structure is quite straightforward:

-

-- First, it checks if the page content has already been "distilled" by the