3rd article migration WIP


parent

745f3b7b9c

commit

4d32eca17c

3 changed files with 269 additions and 0 deletions

BIN

static/img/people-detect-2.png

Normal file

Binary file not shown. Size: 87 KiB

BIN

static/img/people-detect-3.png

Normal file

Binary file not shown. Size: 39 KiB

@@ -166,3 +166,272 @@ it shouldn’t be an issue for your model training purposes.

If you open the web panel (`http://your-host:8008`) you’ll also notice a new tab, represented by the sun icon, that you
can use to monitor your camera from a web interface.

You can also monitor the camera directly, outside of the web panel, by pointing your browser to
`http://your-host:8008/camera/ir/mlx90640/stream?rotate=270&scale_factor=20`.
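If you want to build that stream URL programmatically (for instance to try different rotations or scale factors), a minimal sketch using only the standard library could look like the following. The `stream_url` helper and its defaults are assumptions made for illustration and are not part of Platypush; only the path and the `rotate` and `scale_factor` query parameters come from the URL above.

```python
from urllib.parse import urlencode


def stream_url(host: str, port: int = 8008, rotate: int = 270, scale_factor: int = 20) -> str:
    """Build the MLX90640 stream URL shown above (hypothetical helper)."""
    query = urlencode({'rotate': rotate, 'scale_factor': scale_factor})
    return f'http://{host}:{port}/camera/ir/mlx90640/stream?{query}'


print(stream_url('your-host'))
```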

Now add a cronjob to your `config.yaml` to take snapshots every minute:

```yaml
cron.ThermalCameraSnapshotCron:
    cron_expression: '* * * * *'
    actions:
        - action: camera.ir.mlx90640.capture
          args:
              output_file: "${__import__('datetime').datetime.now().strftime('/your/img/folder/%Y-%m-%d_%H-%M-%S.jpg')}"
              grayscale: true
```

Alternatively, define the cronjob directly as a Python script under e.g. `~/.config/platypush/thermal.py` (make sure that `~/.config/platypush/__init__.py` also exists so the folder is recognized as a Python module):

```python
from datetime import datetime

from platypush.config import Config
from platypush.cron import cron
from platypush.utils import run


@cron('* * * * *')
def take_thermal_picture(**context):
    # Note: %M is minutes; %m would be the month
    run('camera.ir.mlx90640.capture', grayscale=True,
        output_file=datetime.now().strftime('/your/img/folder/%Y-%m-%d_%H-%M-%S.jpg'))
```

The images will be stored under `/your/img/folder` in the format
`YYYY-mm-dd_HH-MM-SS.jpg`. No scale factor is applied: the images will be
tiny, but we only need them to train our model. We also convert the images
to grayscale, which makes the neural network lighter and actually more accurate,
as it only has to rely on one variable per pixel without being tricked by
RGB combinations.
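As a quick sanity check of the file naming, the following sketch reproduces the timestamp pattern outside of Platypush. The `snapshot_path` helper is a hypothetical name used only for illustration. Note that `%M` stands for minutes, while `%m` would be the month.

```python
from datetime import datetime


def snapshot_path(folder: str, when: datetime) -> str:
    """Return the snapshot path in the YYYY-mm-dd_HH-MM-SS.jpg format (hypothetical helper)."""
    return folder.rstrip('/') + '/' + when.strftime('%Y-%m-%d_%H-%M-%S.jpg')


# A fixed timestamp makes the resulting file name easy to verify by eye
print(snapshot_path('/your/img/folder', datetime(2020, 3, 14, 15, 9, 26)))
```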

Restart Platypush and verify that a new picture is created under your images
directory every minute. Let it run for a few hours or days until you’re happy
with the number of samples. Try to balance the number of pictures with no
people in the room and those with people in the room, and cover as many
cases as possible, e.g. sitting or standing in different spots of the room.
As I mentioned earlier, in my case I needed fewer than 1000 pictures with
enough variety to achieve accuracy levels above 99%.

## Labelling phase

Once you’re happy with the number of samples you’ve taken, copy the images over
to the machine you’ll be using to train your model (they should all be small
JPEG files weighing under 500 bytes each). Copy them to the folder where you
have cloned my `imgdetect-utils` repository:

```shell
BASEDIR=~/git_tree/imgdetect-utils

# This directory will contain your raw images
IMGDIR=$BASEDIR/datasets/ir/images

# This directory will contain the raw numpy training
# data parsed from the images
DATADIR=$BASEDIR/datasets/ir/data

mkdir -p $IMGDIR
mkdir -p $DATADIR

# Copy the images
scp pi@raspberry:/your/img/folder/*.jpg $IMGDIR

# Create the labels for the images. Each label is a
# directory under $IMGDIR
mkdir $IMGDIR/negative
mkdir $IMGDIR/positive
```

|

||||||

|

|

||||||

|

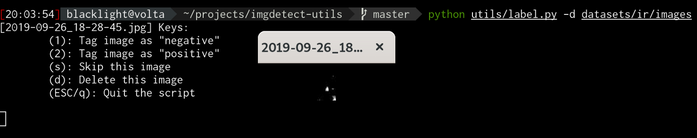

Once the images have been copied and the directories for the labels created,

|

||||||

|

run the `label.py` script provided in the repository to interactively label the

|

||||||

|

images:

|

||||||

|

|

||||||

|

```shell
cd $BASEDIR
python utils/label.py -d $IMGDIR --scale-factor 10
```

Each image will open in a new window and you can label it by typing either 1
(negative) or 2 (positive). The label names are taken from the names of the
directories you created in the previous step.

At the end of the procedure the `negative` and `positive` directories under the
images directory should have been populated.
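Before training, it can be useful to check how balanced the two classes are. The sketch below counts the `.jpg` files in each label directory. The `count_labelled_images` name is an assumption made for this example and is not part of `imgdetect-utils`; the directory layout is the one created in the shell snippet above.

```python
from pathlib import Path


def count_labelled_images(img_dir: str) -> dict:
    """Map each label directory under img_dir to the number of .jpg files it contains."""
    return {
        label_dir.name: sum(1 for _ in label_dir.glob('*.jpg'))
        for label_dir in sorted(Path(img_dir).iterdir())
        if label_dir.is_dir()
    }


if __name__ == '__main__':
    # Same location as $IMGDIR in the shell snippet above
    img_dir = Path.home() / 'git_tree' / 'imgdetect-utils' / 'datasets' / 'ir' / 'images'
    if img_dir.is_dir():
        print(count_labelled_images(str(img_dir)))
```

If one of the two counts is much lower than the other, consider capturing more samples for that class before moving on.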

## Training phase

Once we’ve got all the labelled images it’s time to train our model. A
[`train.ipynb`](https://github.com/BlackLight/imgdetect-utils/blob/master/notebooks/ir/train.ipynb)
Jupyter notebook is provided under `notebooks/ir` and it should be
relatively self-explanatory:

```python
### Import stuff

import os
import sys

import numpy as np

import tensorflow as tf
from tensorflow import keras

######
# Replace this with the directory where you cloned the imgdetect-utils repo
basedir = os.path.join(os.path.expanduser('~'), 'git_tree', 'imgdetect-utils')
sys.path.append(os.path.join(basedir))

from src.image_helpers import plot_images_grid, create_dataset_files
from src.train_helpers import load_data, plot_results, export_model

# Define the dataset directory - replace it with the path on your local
# machine where you have stored the previously labelled dataset.
dataset_dir = os.path.join(basedir, 'datasets', 'ir')

# Define the size of the input images. In the case of an
# MLX90640 it will be (24, 32) for horizontal images and
# (32, 24) for vertical images
input_size = (32, 24)

# Image generator batch size
batch_size = 64

# Number of training epochs
epochs = 5
######

# The Tensorflow model and properties file will be stored here
tf_model_dir = os.path.join(basedir, 'models', 'ir', 'tensorflow')
tf_model_file = os.path.join(tf_model_dir, 'ir.pb')
tf_properties_file = os.path.join(tf_model_dir, 'ir.json')

# Base directory that contains your training images and dataset files
dataset_base_dir = os.path.join(basedir, 'datasets', 'ir')
dataset_dir = os.path.join(dataset_base_dir, 'data')

# Store your thermal camera images here
img_dir = os.path.join(dataset_base_dir, 'images')

### Create model directories

os.makedirs(tf_model_dir, mode=0o775, exist_ok=True)

### Create the dataset files from the available images

dataset_files = create_dataset_files(img_dir, dataset_dir,
                                     split_size=1000,
                                     num_threads=1,
                                     resize=input_size)

### Or load existing .npz dataset files

dataset_files = [os.path.join(dataset_dir, f)
                 for f in os.listdir(dataset_dir)
                 if os.path.isfile(os.path.join(dataset_dir, f))
                 and f.endswith('.npz')]

### Get the training and test set randomly out of the dataset with a split of 70/30

train_set, test_set, classes = load_data(*dataset_files, split_percentage=0.7)
print('Loaded {} training images and {} test images. Classes: {}'.format(
    train_set.shape[0], test_set.shape[0], classes))

# Example output:
# Loaded 623 training images and 267 test images. Classes: ['negative' 'positive']

# Extract training set and test set images and labels
train_images = np.asarray([item[0] for item in train_set])
train_labels = np.asarray([item[1] for item in train_set])
test_images = np.asarray([item[0] for item in test_set])
test_labels = np.asarray([item[1] for item in test_set])

### Inspect the first 25 images in the training set

plot_images_grid(images=train_images, labels=train_labels,
                 classes=classes, rows=5, cols=5)

### Declare the model

# - Flatten input
# - Layer 1: 50% the number of pixels per image (RELU activation)
# - Layer 2: 20% the number of pixels per image (RELU activation)
# - Layer 3: as many neurons as the output labels
#   (in this case 2: negative, positive) (Softmax activation)

model = keras.Sequential([
    keras.layers.Flatten(input_shape=train_images[0].shape),
    keras.layers.Dense(int(0.5 * train_images.shape[1] * train_images.shape[2]),
                       activation=tf.nn.relu),
    keras.layers.Dense(int(0.2 * train_images.shape[1] * train_images.shape[2]),
                       activation=tf.nn.relu),
    keras.layers.Dense(len(classes), activation=tf.nn.softmax)
])

### Compile the model

# - Loss function: This measures how accurate the model is during training. We
#   want to minimize this function to "steer" the model in the right direction.
# - Optimizer: This is how the model is updated based on the data it sees and
#   its loss function.
# - Metrics: Used to monitor the training and testing steps. The following
#   example uses accuracy, the fraction of the images that are correctly classified.

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

### Train the model

model.fit(train_images, train_labels, epochs=3)

# Example output:
# Epoch 1/3 623/623 [======] - 0s 487us/sample - loss: 0.2672 - acc: 0.8860
# Epoch 2/3 623/623 [======] - 0s 362us/sample - loss: 0.0247 - acc: 0.9936
# Epoch 3/3 623/623 [======] - 0s 373us/sample - loss: 0.0083 - acc: 0.9984

### Evaluate accuracy against the test set

test_loss, test_acc = model.evaluate(test_images, test_labels)
print('Test accuracy:', test_acc)

# Example output:
# 267/267 [======] - 0s 243us/sample - loss: 0.0096 - acc: 0.9963
# Test accuracy: 0.9962547

### Make predictions on the test set

predictions = model.predict(test_images)

# Plot a grid of 36 images and show expected vs. predicted values
plot_results(images=test_images, labels=test_labels,
             classes=classes, predictions=predictions,
             rows=9, cols=4)

### Export as a Tensorflow model

export_model(model, tf_model_file,
             properties_file=tf_properties_file,
             classes=classes,
             input_size=input_size)
```
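To make the layer sizing in the model declaration concrete, this small sketch computes the number of units per layer for a 32x24 grayscale input, using the same 50% and 20% ratios as the notebook. The `dense_layer_sizes` helper is hypothetical and exists only for this illustration.

```python
def dense_layer_sizes(input_size, num_classes, ratios=(0.5, 0.2)):
    """Return the number of units of each dense layer for a flattened grayscale image."""
    pixels = input_size[0] * input_size[1]  # one value per pixel, since images are grayscale
    hidden = [int(ratio * pixels) for ratio in ratios]
    return hidden + [num_classes]


print(dense_layer_sizes((32, 24), num_classes=2))
```

For the 768 pixels of an MLX90640 frame this gives 384 and 153 hidden units, followed by 2 output units (negative, positive).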

If you managed to execute the whole notebook correctly you’ll have a file named
`ir.pb` under `models/ir/tensorflow`. That’s your TensorFlow model file. You can
now copy it over to the Raspberry Pi and use it to make predictions:

```shell
scp $BASEDIR/models/ir/tensorflow/ir.pb pi@raspberry:/home/pi/models
```

## Detect people in the room

Once the TensorFlow model has been deployed to the Raspberry Pi you can replace the
previous cronjob that stores pictures at regular intervals with a cronjob that captures
pictures and feeds them to the previously trained model
