Also install the Python dependencies for the HTTP server, the MLX90640 plugin and Tensorflow:

```shell
[sudo] pip install 'platypush[http,tensorflow,mlx90640]'
```

Tensorflow may also require some additional dependencies installable via `apt-get`:

```shell
[sudo] apt-get install python3-numpy \
    libatlas-base-dev \
    libblas-dev \
    liblapack-dev \
    python3-dev \
    gfortran \
    python3-setuptools \
    python3-scipy \
    python3-h5py
```

Head over to your computer (we'll use it to build the model that will then run on the RaspberryPi) and install
OpenCV, Tensorflow, Jupyter and my utilities for handling images:

```shell
# Clone my repository with the image and training utilities
# and the Jupyter notebooks that we'll use for training.
git clone https://github.com/BlackLight/imgdetect-utils ~/projects/imgdetect-utils
```

## Capture phase

Now that you’ve got all the hardware and software in place, it’s time to start capturing frames with your camera and use
them to train your model. First, configure

```shell
curl -XPOST -H 'Content-Type: application/json' -d '
    "output_file":"~/snap.png",
    "scale_factor":20
  }
}' -u 'username:password' http://localhost:8008/execute
```
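If you would rather script these calls than type them by hand, you can send the same kind of request from Python using only the standard library. This is a minimal sketch built on several assumptions. It assumes that the JSON body has the `{"type": "request", "action": ..., "args": {...}}` shape used by the `tensorflow.predict` request later in this article. It assumes that the server accepts the same basic-auth credentials that `curl -u` sends. It also assumes that the snapshot action is `camera.ir.mlx90640.capture`, which is the action used by the capture cron. `build_request` and `execute` are hypothetical helper names.

```python
import base64
import json
import urllib.request


def build_request(action, **args):
    """Build a Platypush request message (assumed shape, see above)."""
    return {"type": "request", "action": action, "args": args}


def execute(action, url="http://localhost:8008/execute",
            username="username", password="password", **args):
    """POST an action to the Platypush HTTP endpoint with basic auth
    (like `curl -u 'username:password'`) and return the decoded reply."""
    body = json.dumps(build_request(action, **args)).encode("utf-8")
    credentials = f"{username}:{password}".encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
    }
    req = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(req) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `execute('camera.ir.mlx90640.capture', output_file='~/snap.png', scale_factor=20)` would send the snapshot request shown above.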

If everything went well, the thermal picture should be stored under `~/snap.png`. In my case it looks like this while

```yaml
cron.ThermalCameraSnapshotCron:
    actions:
        - action: camera.ir.mlx90640.capture
          args:
              output_file: "${__import__('datetime').datetime.now().strftime('/home/pi/datasets/people_detect/images/%Y-%m-%d_%H-%M-%S.jpg')}"
              grayscale: true
```

Or directly as a Python script under e.g. `~/.config/platypush/thermal.py` (make

```python
from datetime import datetime

from platypush.cron import cron
from platypush.utils import run


@cron('* * * * *')
def take_thermal_picture(**context):
    # Note: %M is minutes, while %m would be the month
    run('camera.ir.mlx90640.capture', grayscale=True,
        output_file=datetime.now().strftime('/home/pi/datasets/people_detect/images/%Y-%m-%d_%H-%M-%S.jpg'))
```

The images will be stored under `/home/pi/datasets/people_detect/images` (make sure that the directory exists before starting
the service) in the format `YYYY-mm-dd_HH-MM-SS.jpg`. No scale factor is applied: the images will be tiny, but we’ll
only need them to train our model. Also, we’ll convert the images to grayscale: the neural network will be lighter and
actually more accurate, as it will only have to rely on one variable per pixel without being tricked by RGB
combinations.
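The file name pattern matters later on, and in `strftime` it is easy to confuse `%M` (minutes) with `%m` (month). The following small sketch uses two hypothetical helpers, `snapshot_path` and `snapshot_time`. It builds the file name for a given timestamp and then parses the name back, which confirms that the format survives a round trip:

```python
import os
from datetime import datetime

IMG_DIR = '/home/pi/datasets/people_detect/images'
# %M is minutes, %m is the month: the time part must use %H-%M-%S
NAME_FORMAT = '%Y-%m-%d_%H-%M-%S.jpg'


def snapshot_path(ts: datetime, img_dir: str = IMG_DIR) -> str:
    """Return the path a snapshot taken at `ts` would be stored under."""
    return os.path.join(img_dir, ts.strftime(NAME_FORMAT))


def snapshot_time(path: str) -> datetime:
    """Recover the capture time from a snapshot file name."""
    return datetime.strptime(os.path.basename(path), NAME_FORMAT)


ts = datetime(2020, 1, 2, 3, 45, 6)
path = snapshot_path(ts)
print(path)  # /home/pi/datasets/people_detect/images/2020-01-02_03-45-06.jpg
assert snapshot_time(path) == ts
```

With `%H-%m-%S`, the same timestamp would produce `03-01-06`, using the month where the minutes should be.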

Restart Platypush and verify that every minute a new picture is created under
your images directory. Let it run for a few hours or days until you’re happy

enough variety to achieve accuracy levels above 99%.

Once you’re happy with the number of samples you’ve taken, copy the images over
to the machine you’ll be using to train your model (they should all be small
JPEG files weighing under 500 bytes each):

```shell
BASEDIR=~/datasets/people_detect
mkdir -p "$BASEDIR"

# Copy the images: this recreates the remote people_detect
# folder (including images/) under ~/datasets
scp -r pi@raspberry:/home/pi/datasets/people_detect ~/datasets/
IMGDIR="$BASEDIR/images"

# This directory will contain the raw numpy training
# data parsed from the images (useful if you want to
# re-train the model without having to reprocess all
# the images)
DATADIR="$BASEDIR/data"

mkdir -p "$IMGDIR"
mkdir -p "$DATADIR"

# Create the labels for the images. Each label is a
# directory under $IMGDIR
mkdir "$IMGDIR/negative"
mkdir "$IMGDIR/positive"
```
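Each label is simply a subdirectory of `$IMGDIR`, so a few lines of Python are enough to see how many images are still unlabelled and how balanced the two classes are. You can run the check both before and after labelling. This sketch assumes the layout created above: raw `.jpg` files sit directly under the images directory, and labelled ones sit inside `negative/` and `positive/`. It also assumes that labelling moves each image into its label folder. `count_images` is a hypothetical helper and is not part of `imgdetect-utils`.

```python
import os
from collections import Counter


def count_images(img_dir, ext='.jpg'):
    """Count images lying directly under img_dir (reported as 'unlabelled')
    and images inside each label subdirectory (e.g. 'negative', 'positive')."""
    counts = Counter()
    for name in os.listdir(img_dir):
        path = os.path.join(img_dir, name)
        if os.path.isdir(path):
            # One label directory: count the image files it contains
            counts[name] += sum(
                1 for f in os.listdir(path)
                if f.lower().endswith(ext) and os.path.isfile(os.path.join(path, f)))
        elif name.lower().endswith(ext):
            counts['unlabelled'] += 1
    return counts
```

For example, `count_images(os.path.expanduser('~/datasets/people_detect/images'))` returns a `Counter` with one entry per label plus the number of images still waiting to be labelled.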

Once the images have been copied and the directories for the labels created,
run the `label.py` script provided in the repository to interactively label the
images:

```shell
UTILS_DIR=~/projects/imgdetect-utils
cd "$UTILS_DIR"
python utils/label.py -d "$IMGDIR" --scale-factor 10
```

Each image will open in a new window and you can label it by typing either 1

Jupyter notebook is provided under `notebooks/ir` and it should be
relatively self-explanatory:

|

||||

|

||||

```python

|

||||

### Import stuff

|

||||

|

||||

import os

|

||||

import sys

|

||||

|

||||

import numpy as np

|

||||

import matplotlib.pyplot as plt

|

||||

|

||||

import tensorflow as tf

|

||||

from tensorflow import keras

|

||||

|

||||

######

|

||||

# Change this with the directory where you cloned the imgdetect-utils repo

|

||||

basedir = os.path.join(os.path.expanduser('~'), 'git_tree', 'imgdetect-utils')

|

||||

sys.path.append(os.path.join(basedir))

|

||||

|

||||

from src.image_helpers import plot_images_grid, create_dataset_files

|

||||

from src.train_helpers import load_data, plot_results, export_model

|

||||

from tensorflow.keras.preprocessing.image import ImageDataGenerator

|

||||

|

||||

# Define the dataset directory - replace it with the path on your local

|

||||

# machine where you have stored the previously labelled dataset.

|

||||

dataset_dir = os.path.join(basedir, 'datasets', 'ir')

|

||||

dataset_dir = os.path.join(os.path.expanduser('~'), 'datasets', 'people_detect')

|

||||

|

||||

# Define the size of the input images. In the case of an

|

||||

# MLX90640 it will be (24, 32) for horizontal images and

|

||||

|

|

@ -302,136 +306,252 @@ batch_size = 64

|

|||

|

||||

# Number of training epochs

|

||||

epochs = 5

|

||||

######

|

||||

|

||||

# The Tensorflow model and properties file will be stored here

|

||||

tf_model_dir = os.path.join(basedir, 'models', 'ir', 'tensorflow')

|

||||

tf_model_file = os.path.join(tf_model_dir, 'ir.pb')

|

||||

tf_properties_file = os.path.join(tf_model_dir, 'ir.json')

|

||||

# Instantiate a generator that puts 30% of the images into the validation set

|

||||

# and normalizes their pixel values between 0 and 1

|

||||

generator = ImageDataGenerator(rescale=1./255, validation_split=0.3)

|

||||

|

||||

# Base directory that contains your training images and dataset files

|

||||

dataset_base_dir = os.path.join(basedir, 'datasets', 'ir')

|

||||

dataset_dir = os.path.join(dataset_base_dir, 'data')

|

||||

train_data = generator.flow_from_directory(dataset_dir,

|

||||

target_size=image_size,

|

||||

batch_size=batch_size,

|

||||

subset='training',

|

||||

class_mode='categorical',

|

||||

color_mode='grayscale')

|

||||

|

||||

# Store your thermal camera images here

|

||||

img_dir = os.path.join(dataset_base_dir, 'images')

|

||||

|

||||

### Create model directories

|

||||

|

||||

os.makedirs(tf_model_dir, mode=0o775, exist_ok=True)

|

||||

|

||||

### Create a dataset files from the available images

|

||||

|

||||

dataset_files = create_dataset_files(img_dir, dataset_dir,

|

||||

split_size=1000,

|

||||

num_threads=1,

|

||||

resize=input_size)

|

||||

|

||||

### Or load existing .npz dataset files

|

||||

|

||||

|

||||

|

||||

dataset_files = [os.path.join(dataset_dir, f)

|

||||

for f in os.listdir(dataset_dir)

|

||||

if os.path.isfile(os.path.join(dataset_dir, f))

|

||||

and f.endswith('.npz')]

|

||||

|

||||

### Get the training and test set randomly out of the dataset with a split of 70/30

|

||||

|

||||

train_set, test_set, classes = load_data(*dataset_files, split_percentage=0.7)

|

||||

print('Loaded {} training images and {} test images. Classes: {}'.format(

|

||||

train_set.shape[0], test_set.shape[0], classes))

|

||||

|

||||

# Example output:

|

||||

# Loaded 623 training images and 267 test images. Classes: ['negative' 'positive']

|

||||

|

||||

# Extract training set and test set images and labels

|

||||

train_images = np.asarray([item[0] for item in train_set])

|

||||

train_labels = np.asarray([item[1] for item in train_set])

|

||||

test_images = np.asarray([item[0] for item in test_set])

|

||||

test_labels = np.asarray([item[1] for item in test_set])

|

||||

|

||||

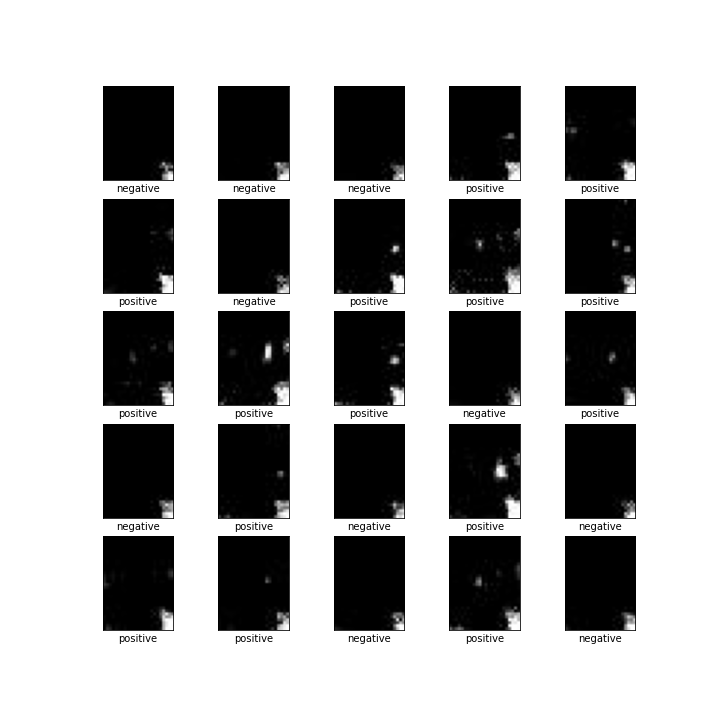

### Inspect the first 25 images in the training set

|

||||

|

||||

plot_images_grid(images=train_images, labels=train_labels,

|

||||

classes=classes, rows=5, cols=5)

|

||||

|

||||

### Declare the model

|

||||

|

||||

# - Flatten input

|

||||

# - Layer 1: 50% the number of pixels per image (RELU activation)

|

||||

# - Layer 2: 20% the number of pixels per image (RELU activation)

|

||||

# - Layer 3: as many neurons as the output labels

|

||||

# (in this case 2: negative, positive) (Softmax activation)

|

||||

|

||||

model = keras.Sequential([

|

||||

keras.layers.Flatten(input_shape=train_images[0].shape),

|

||||

keras.layers.Dense(int(0.5 * train_images.shape[1] * train_images.shape[2]),

|

||||

activation=tf.nn.relu),

|

||||

keras.layers.Dense(int(0.2 * train_images.shape[1] * train_images.shape[2]),

|

||||

activation=tf.nn.relu),

|

||||

keras.layers.Dense(len(classes), activation=tf.nn.softmax)

|

||||

])

|

||||

|

||||

### Compile the model

|

||||

|

||||

# - Loss function:This measures how accurate the model is during training. We

|

||||

# want to minimize this function to "steer" the model in the right direction.

|

||||

# - Optimizer: This is how the model is updated based on the data it sees and

|

||||

# its loss function.

|

||||

# - Metrics: Used to monitor the training and testing steps. The following

|

||||

# example uses accuracy, the fraction of the images that are correctly classified.

|

||||

|

||||

model.compile(optimizer='adam',

|

||||

loss='sparse_categorical_crossentropy',

|

||||

metrics=['accuracy'])

|

||||

|

||||

### Train the model

|

||||

|

||||

model.fit(train_images, train_labels, epochs=3)

|

||||

|

||||

# Example output:

|

||||

# Epoch 1/3 623/623 [======] - 0s 487us/sample - loss: 0.2672 - acc: 0.8860

|

||||

# Epoch 2/3 623/623 [======] - 0s 362us/sample - loss: 0.0247 - acc: 0.9936

|

||||

# Epoch 3/3 623/623 [======] - 0s 373us/sample - loss: 0.0083 - acc: 0.9984

|

||||

|

||||

### Evaluate accuracy against the test set

|

||||

|

||||

test_loss, test_acc = model.evaluate(test_images, test_labels)

|

||||

print('Test accuracy:', test_acc)

|

||||

|

||||

# Example output:

|

||||

# 267/267 [======] - 0s 243us/sample - loss: 0.0096 - acc: 0.9963

|

||||

# Test accuracy: 0.9962547

|

||||

|

||||

### Make predictions on the test set

|

||||

|

||||

predictions = model.predict(test_images)

|

||||

|

||||

# Plot a grid of 36 images and show expected vs. predicted values

|

||||

plot_results(images=test_images, labels=test_labels,

|

||||

classes=classes, predictions=predictions,

|

||||

rows=9, cols=4)

|

||||

|

||||

### Export as a Tensorflow model

|

||||

|

||||

export_model(model, tf_model_file,

|

||||

properties_file=tf_properties_file,

|

||||

classes=classes,

|

||||

input_size=input_size)

|

||||

test_data = generator.flow_from_directory(dataset_dir,

|

||||

target_size=image_size,

|

||||

batch_size=batch_size,

|

||||

subset='validation',

|

||||

class_mode='categorical',

|

||||

color_mode='grayscale')

|

||||

```

|

||||

|

||||

If you managed to execute the whole notebook correctly you’ll have a file named

|

||||

`ir.pb` under `models/ir/tensorflow`. That’s your Tensorflow model file, you can

|

||||

now copy it over to the RaspberryPi and use it to do predictions:

|

||||

After initializing the generators, let's take a look at a sample of 25 images from the training set together with their

|

||||

labels:

|

||||

|

||||

```python

|

||||

index_to_label = {

|

||||

index: label

|

||||

for label, index in train_data.class_indices.items()

|

||||

}

|

||||

|

||||

plt.figure(figsize=(10, 10))

|

||||

batch = train_data.next()

|

||||

|

||||

for i in range(min(25, len(batch[0]))):

|

||||

img = batch[0][i]

|

||||

|

||||

label = index_to_label[np.argmax(batch[1][i])]

|

||||

plt.subplot(5, 5, i+1)

|

||||

plt.xticks([])

|

||||

plt.yticks([])

|

||||

plt.grid(False)

|

||||

|

||||

# Note the np.squeeze call - matplotlib can't

|

||||

# process grayscale images unless the extra

|

||||

# 1-sized dimension is removed.

|

||||

plt.imshow(np.squeeze(img))

|

||||

plt.xlabel(label)

|

||||

|

||||

plt.show()

|

||||

```

|

||||

|

||||

You should see an image like this:

|

||||

|

||||

|

||||

|

||||
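The snippet above works with batches of a particular shape. With `color_mode='grayscale'` and `class_mode='categorical'`, each batch returned by the generator is a pair of arrays. The first holds the images and has shape `(batch, height, width, 1)`. The second holds one-hot labels and has shape `(batch, num_classes)`. The sketch below does not use Keras. It builds a fake batch with NumPy from random pixels and shows what `np.squeeze` and `np.argmax` do to it. It assumes the `(24, 32)` MLX90640 frame size and the two classes, `negative` and `positive`, indexed in alphanumeric order as `flow_from_directory` does.

```python
import numpy as np

# Assumptions: 24x32 frames, two classes indexed alphanumerically
image_size = (24, 32)
class_indices = {'negative': 0, 'positive': 1}
index_to_label = {index: label for label, index in class_indices.items()}

rng = np.random.default_rng(0)
batch_size = 4

# Fake batch of random pixels, already rescaled to [0, 1] like rescale=1./255
images = rng.integers(0, 256, size=(batch_size, *image_size, 1)) / 255.0
# One-hot labels, one row per image
labels = np.eye(len(class_indices))[rng.integers(0, 2, size=batch_size)]

first = images[0]
print(first.shape)              # (24, 32, 1): trailing channel axis
print(np.squeeze(first).shape)  # (24, 32): a plain 2D grayscale image
print(index_to_label[int(np.argmax(labels[0]))])
```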

Let's now declare a model and train it on the given training set:

```python
model = keras.Sequential([
    # Layer 1: flatten the input images (grayscale images carry a
    # trailing channel dimension of size 1)
    keras.layers.Flatten(input_shape=(*image_size, 1)),

    # Layer 2: fully-connected layer with 80% as many neurons as the
    # input images have pixels, and RELU activation function
    keras.layers.Dense(round(0.8 * image_size[0] * image_size[1]),
                       activation=tf.nn.relu),

    # Layer 3: fully-connected layer with 30% as many neurons as the
    # input images have pixels, and RELU activation function
    keras.layers.Dense(round(0.3 * image_size[0] * image_size[1]),
                       activation=tf.nn.relu),

    # Layer 4: fully-connected layer with as many units as the output labels
    # and Softmax activation function
    keras.layers.Dense(len(train_data.class_indices),
                       activation=tf.nn.softmax)
])

# Compile the model for classification, use the Adam optimizer and track
# accuracy as the evaluation metric
model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

# Train the model in batches (len() of a generator is its number of batches)
history = model.fit(
    train_data,
    steps_per_epoch=len(train_data),
    validation_data=test_data,
    validation_steps=len(test_data),
    epochs=epochs
)

# Example output:
# Epoch 1/5 loss: 0.2529 - accuracy: 0.9196 - val_loss: 0.0543 - val_accuracy: 0.9834
# Epoch 2/5 loss: 0.0572 - accuracy: 0.9801 - val_loss: 0.0213 - val_accuracy: 0.9967
# Epoch 3/5 loss: 0.0254 - accuracy: 0.9915 - val_loss: 0.0080 - val_accuracy: 1.0000
# Epoch 4/5 loss: 0.0117 - accuracy: 0.9979 - val_loss: 0.0053 - val_accuracy: 0.9967
# Epoch 5/5 loss: 0.0058 - accuracy: 1.0000 - val_loss: 0.0046 - val_accuracy: 0.9983
```
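The network is small enough to run comfortably on a RaspberryPi. As a rough check, the sketch below counts the trainable parameters of the model declared above. It assumes `(24, 32)` input frames and two classes. Each `Dense` layer has `inputs * units` weights plus `units` biases, and `Flatten` has no parameters. On the real model, `model.summary()` or `model.count_params()` reports the same kind of figure.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus biases of a fully-connected layer."""
    return n_inputs * n_units + n_units


def count_model_params(image_size=(24, 32), n_classes=2):
    n_pixels = image_size[0] * image_size[1]   # output size of Flatten
    hidden_1 = round(0.8 * n_pixels)           # first Dense layer
    hidden_2 = round(0.3 * n_pixels)           # second Dense layer
    return (dense_params(n_pixels, hidden_1)
            + dense_params(hidden_1, hidden_2)
            + dense_params(hidden_2, n_classes))


print(count_model_params())  # 614078
```

That is about 614k parameters, or roughly 2.5 MB of float32 weights.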

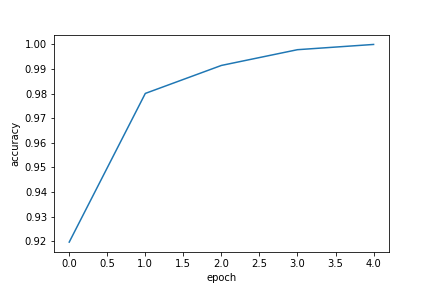

We can now see how the accuracy of the model progressed over the iteration:

|

||||

|

||||

```python

|

||||

epochs = history.epoch

|

||||

accuracy = history.history['accuracy']

|

||||

fig = plt.figure()

|

||||

plot = fig.add_subplot()

|

||||

plot.set_xlabel('epoch')

|

||||

plot.set_ylabel('accuracy')

|

||||

plot.plot(epochs, accuracy)

|

||||

```

|

||||

|

||||

The output should look like this:

|

||||

|

||||

|

||||

|

||||

By constraining the problem properly (i.e. translating "detect people in an image" to "infer the presence of people by
telling if there are more white halos than usual in a small grayscale image") we have indeed managed to achieve high
levels of accuracy on both the training and validation sets despite using a relatively small dataset.

## Deploying the model

Once you are happy with the model, it's time to save it so it can be deployed to your RaspberryPi for real-time
predictions:

```python
def model_save(model, target, labels=None, overwrite=True):
    import json
    import os
    import pathlib

    # Check if we should save it like a .h5/.pb file or as a directory
    model_dir = pathlib.Path(target)
    if str(target).endswith('.h5') or \
            str(target).endswith('.pb'):
        model_dir = model_dir.parent

    # Create the model directory if it doesn't exist
    pathlib.Path(model_dir).mkdir(parents=True, exist_ok=True)

    # Save the Tensorflow model using the .save method
    model.save(target, overwrite=overwrite)

    # Save the label names of your model in a separate JSON file
    if labels:
        labels_file = os.path.join(model_dir, 'labels.json')
        with open(labels_file, 'w') as f:
            f.write(json.dumps(list(labels)))


model_dir = os.path.expanduser('~/models/people_detect')
model_save(model, model_dir,
           labels=train_data.class_indices.keys(), overwrite=True)
```
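The `labels.json` file written by `model_save` is what lets a prediction be reported by name. `train_data.class_indices` maps each class name to its output index. Its keys are stored in index order, which is alphanumeric as assigned by `flow_from_directory`. Position *i* in the saved list therefore corresponds to output *i* of the softmax layer. Here is a minimal sketch of that mapping, using a hypothetical `decode_prediction` helper and made-up output values:

```python
import json


def decode_prediction(probabilities, labels):
    """Map a softmax output vector to {label: score} and the top label."""
    if len(probabilities) != len(labels):
        raise ValueError('one probability per label expected')
    scores = dict(zip(labels, probabilities))
    best = max(scores, key=scores.get)
    return scores, best


# Contents of labels.json as written by model_save for this dataset
labels = json.loads('["negative", "positive"]')
scores, best = decode_prediction([0.03, 0.97], labels)
print(scores)  # {'negative': 0.03, 'positive': 0.97}
print(best)    # positive
```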

If you managed to execute the whole notebook then you’ll have your model saved under `~/models/people_detect`.
You can now copy it over to the RaspberryPi and use it to do predictions (first create `~/models` on the RaspberryPi
if it's not available already):

```shell
scp -r ~/models/people_detect pi@raspberry:/home/pi/models
```

## Detect people in the room

Once the Tensorflow model has been deployed to the RaspberryPi you can quickly test how it performs against some
pictures taken on the device using
the [`tensorflow.predict`](https://platypush.readthedocs.io/en/latest/platypush/plugins/tensorflow.html#platypush.plugins.tensorflow.TensorflowPlugin.predict)
method:

```shell
curl -XPOST -u 'user:pass' -H 'Content-Type: application/json' -d '
{
  "type":"request",
  "action":"tensorflow.predict",
  "args": {
    "inputs": "~/datasets/people_detect/positive/some_image.jpg",
    "model": "~/models/people_detect"
  }
}' http://your-raspberry-pi:8008/execute
```

Expected output:

```json
{
  "id": "<response-id>",
  "type": "response",
  "target": "http",
  "origin": "raspberrypi",
  "response": {
    "output": {
      "model": "~/models/people_detect",
      "outputs": [
        {
          "negative": 0,
          "positive": 1
        }
      ],
      "predictions": [
        "positive"
      ]
    },
    "errors": []
  }
}
```
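When you call the endpoint from a script rather than by hand, the prediction is found under `response.output.predictions`, with one entry per input. The sketch below extracts it from a reply shaped like the one above. The `extract_prediction` name is hypothetical, and its error handling relies only on the `errors` list shown in the sample:

```python
import json


def extract_prediction(raw_response: str):
    """Return the first predicted label from a tensorflow.predict reply."""
    message = json.loads(raw_response)
    response = message['response']
    if response.get('errors'):
        raise RuntimeError(f"tensorflow.predict failed: {response['errors']}")
    return response['output']['predictions'][0]


sample = '''
{
  "type": "response",
  "response": {
    "output": {
      "model": "~/models/people_detect",
      "outputs": [{"negative": 0, "positive": 1}],
      "predictions": ["positive"]
    },
    "errors": []
  }
}
'''
print(extract_prediction(sample))  # positive
```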

Once the structure of the response is clear, we can replace the previous cronjob that stores pictures at regular
intervals with a new one that captures pictures, feeds them to the previously trained model to make predictions and,
for example, turns on the lights when presence is detected and turns them off when presence is no longer detected.
I'll use a Python script stored under `~/.config/platypush/scripts` in this case, but it will also work with a cron
defined in YAML in `config.yaml`. The lights are controlled through
the [`light.hue`](https://platypush.readthedocs.io/en/latest/platypush/plugins/light.hue.html) plugin in this example:

```python
import os

from platypush.context import get_plugin
from platypush.cron import cron


@cron('* * * * * */30')
def check_presence(**context):
    # Get plugins by name
    camera = get_plugin('camera.ir.mlx90640')
    tensorflow = get_plugin('tensorflow')
    lights = get_plugin('light.hue')

    image_file = '/tmp/frame.jpg'
    # Same model directory that was copied with scp and used in the curl test
    model_dir = os.path.expanduser('~/models/people_detect')

    camera.capture_image(
        image_file=image_file, grayscale=True)

    prediction = tensorflow.predict(
        inputs=image_file, model=model_dir)['predictions'][0]

    if prediction == 'positive':
        lights.on()
    else:
        lights.off()
```

Restart the service and let it run. Every 30 seconds the cron will take a picture, check if people are detected in
that picture and turn the lights on or off accordingly.
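As written, the cron sends an `on` or `off` command to the lights every 30 seconds, even when nothing has changed. You may prefer to act only when the detected state flips, and to ignore a single misclassified frame. One option is to keep a little state between runs. The sketch below is plain Python and hypothetical: the `PresenceSwitch` class and its `confirmations` parameter are not part of Platypush. It also assumes that an instance created at module level in the script stays alive between cron runs.

```python
class PresenceSwitch:
    """Turn a stream of 'positive'/'negative' predictions into on/off events.

    The state only changes after `confirmations` consecutive predictions
    that disagree with it, so a single misclassified frame is ignored.
    """

    def __init__(self, confirmations=2):
        self.confirmations = confirmations
        self.present = None   # unknown until the first prediction
        self._streak = 0      # consecutive predictions contradicting the state

    def update(self, prediction):
        """Return 'on', 'off' or None (no change needed)."""
        detected = prediction == 'positive'
        if self.present is None:
            self.present = detected
            return 'on' if detected else 'off'
        if detected == self.present:
            self._streak = 0
            return None
        self._streak += 1
        if self._streak < self.confirmations:
            return None
        self.present = detected
        self._streak = 0
        return 'on' if detected else 'off'
```

To use it in `check_presence`, call `switch.update(prediction)`. Then call `lights.on()` or `lights.off()` only when it returns `'on'` or `'off'`.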

## What's next?

That’s your call! Feel free to experiment with more elaborate rules, for example to change the status of the music/video
playing in the room when someone enters, using Platypush media plugins. Or say a custom good morning text when you first
enter the room in the morning. Or build your own surveillance system to track the presence of people when you’re not at
home. Or enhance the model to also detect the number of people in the room, not only their presence. Or you can combine
it with an optical flow sensor, distance sensor, laser range sensor or optical camera (Platypush provides plugins for
many of them) to build an even more robust system that also detects and tracks movements or proximity to the sensor,
and so on.
